Data Engineering – the solid basis for effective data utilization

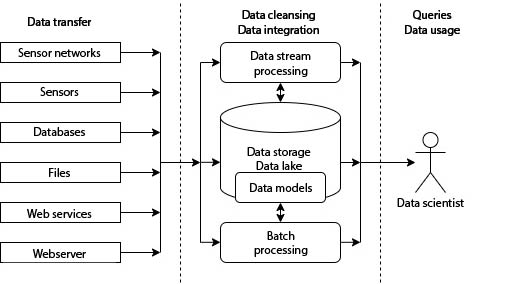

The path of data from sources to the integrated data lake

by DI Paul Heinzlreiter

Data engineering integrates data from a wide variety of sources and makes it usable. This makes it a prerequisite for effective data analysis, machine learning and artificial intelligence, especially in the Big Data area.

Table of contents

- Delimitation

- Data cleansing and integration

- Data storage and data modeling for Big Data

- Use Cases

- Author

In recent years, extracting information from large volumes of data has become increasingly important for businesses in a wide range of economic sectors. Examples include historical sales data that can be used to optimize the product range of online stores, and sensor data from a production line that can help to improve product quality or to replace machine parts in good time as part of preventive maintenance. Besides the direct use of an integrated data basis in day-to-day operations, the current prominence of artificial intelligence (AI) and machine learning (ML), with their promise of, for example, continuously optimizing a production process, is a strong motivation.

However, looking at the information extraction process as a whole, it quickly becomes clear that AI and ML are only the proverbial tip of the iceberg. These methods require large amounts of consistent and complete data, especially for model training and model validation. Such data can be generated, for example, by sensor networks or by sensors in production.

The transfer, storage and processing of this data to make it effectively usable is the central task of data engineering. This holds regardless of whether the goal is effective company-wide reporting, data science to improve the production process, or AI: a solid data basis is necessary in all cases. In addition, integrating the data into a common data basis can provide a reliable ground truth for a wide variety of use cases in the company: for effective day-to-day business, for strategic planning based on solid data and facts, or for model training in AI.

Delimitation

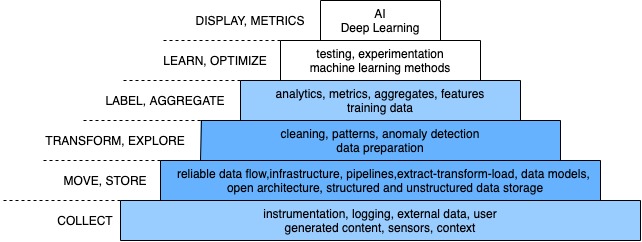

The overarching goal is thus to increase the quality and usability of the available data. In this respect, data engineering essentially follows the data science hierarchy of needs [1], which describes the stages from raw data to AI. Analogous to Maslow’s hierarchy of needs [2], the lower levels of the pyramid are a necessary prerequisite for the levels that build on them.

Data Science Hierarchy of Needs

At the top of the pyramid are the data science activities, which are based on integrated and cleansed data sets; these can then be used, for example, to train ML models. The levels colored blue represent data engineering activities, with a focus on the move/store and transform/explore levels. While the upper levels with AI, deep learning and ML are the domain of data scientists, activities such as data labeling and data aggregation are a borderline area that can be handled by data scientists or data engineers, depending on the exact task and on staff availability.

Data collection at the base of the pyramid falls only partially within the scope of data engineering, because data engineering usually receives the data at a defined interface, such as files, external databases or a network protocol. This is also because data engineering is a sub-field of computer science or software engineering and therefore does not usually deal with building or operating data collection hardware such as sensors.

Data cleansing and integration

As part of the data engineering process, the raw data is prepared in several steps after the transfer and finally stored in the data store in a consistent, fully prepared form:

- Data cleansing

- Data integration

- Data transformation

These transformations are carried out step by step, one after the other. Technically, they can be implemented as data stream processing, i.e. the consecutive processing of many small data packets, or as batch processing of the entire data set at once. An appropriately dimensioned data store, the data lake, makes it possible to persist the data in its various states during processing.
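To illustrate the difference between the two processing styles, here is a minimal Python sketch under simplifying assumptions: records are plain dictionaries, and `cleanse`, `integrate` and `transform` are hypothetical stand-ins for the three steps. The streaming variant pushes each record through all steps before reading the next one; the batch variant finishes each step for the whole data set before starting the next. In practice a framework such as Apache Spark or a stream processor would take over this role.

```python
from typing import Dict, Iterable, Iterator, List, Optional

Record = Dict[str, object]

# Hypothetical master data used by the integration step.
MACHINE_LOCATION = {"m1": "hall A", "m2": "hall B"}


def cleanse(record: Record) -> Optional[Record]:
    """Drop records without a numeric sensor value."""
    value = record.get("value")
    if not isinstance(value, (int, float)):
        return None
    return record


def integrate(record: Record) -> Record:
    """Link the record with master data via its machine ID."""
    return {**record, "location": MACHINE_LOCATION.get(str(record["machine"]))}


def transform(record: Record) -> Record:
    """Adapt the record to the target data model (here: add a composite key)."""
    return {"key": f"{record['machine']}#{record['ts']}", **record}


def process_stream(records: Iterable[Record]) -> Iterator[Record]:
    """Streaming: each record passes all steps before the next one is read."""
    for record in records:
        cleaned = cleanse(record)
        if cleaned is not None:
            yield transform(integrate(cleaned))


def process_batch(records: List[Record]) -> List[Record]:
    """Batch: each step is applied to the entire data set before the next starts."""
    cleaned = [r for r in (cleanse(rec) for rec in records) if r is not None]
    integrated = [integrate(r) for r in cleaned]
    return [transform(r) for r in integrated]


raw: List[Record] = [
    {"machine": "m1", "ts": 1, "value": 20.5},
    {"machine": "m2", "ts": 1, "value": "n/a"},  # fails cleansing
    {"machine": "m2", "ts": 2, "value": 19.0},
]
result = process_batch(raw)
```

Both variants yield the same records; they differ in latency and in how much data has to be held at once, which is why the persisted intermediate states in the data lake matter mostly for batch processing.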

Data cleansing includes, for example, checking the imported data records for completeness and syntactic correctness. In this step, data errors such as incorrect sensor values can also be detected using predefined rules.
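As a sketch of such checks, the following Python example assumes a hypothetical semicolon-separated line format `machine_id;ISO timestamp;temperature` and a hypothetical plausibility range of -40 to 150 °C, standing in for rules that domain experts would define. Each line is checked for completeness, syntactic correctness and the range rule.

```python
import csv
from datetime import datetime
from typing import List, Tuple

# Assumed line format (hypothetical): machine_id;ISO timestamp;temperature in °C
EXPECTED_FIELDS = 3
# Hypothetical plausibility rule, as it might be provided by domain experts.
TEMP_RANGE = (-40.0, 150.0)


def check_line(fields: List[str]) -> List[str]:
    """Return error descriptions; an empty list means the line is valid."""
    # Completeness: correct number of fields and no empty values.
    if len(fields) != EXPECTED_FIELDS or any(f.strip() == "" for f in fields):
        return ["incomplete"]
    _, ts, temp = fields
    errors = []
    # Syntactic correctness: parseable timestamp and number.
    try:
        datetime.fromisoformat(ts)
    except ValueError:
        errors.append("invalid timestamp")
    try:
        value = float(temp)
    except ValueError:
        errors.append("invalid number")
    else:
        # Rule-based detection of implausible sensor values.
        if not TEMP_RANGE[0] <= value <= TEMP_RANGE[1]:
            errors.append("value out of range")
    return errors


def validate(lines: List[str]) -> Tuple[List[List[str]], List[Tuple[int, List[str]]]]:
    """Split lines into valid rows and (line number, errors) pairs."""
    valid, invalid = [], []
    for lineno, fields in enumerate(csv.reader(lines, delimiter=";"), start=1):
        errors = check_line(fields)
        if errors:
            invalid.append((lineno, errors))
        else:
            valid.append(fields)
    return valid, invalid


lines = [
    "m1;2024-03-01T10:00:00;21.5",
    "m1;2024-03-01T10:00:01",        # missing value
    "m2;yesterday;22.0",             # unparseable timestamp
    "m2;2024-03-01T10:00:02;999",    # implausible sensor value
]
valid, invalid = validate(lines)
```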

If these criteria are violated, the following options are available, depending on the application:

- Improve raw data quality: If the raw data can be re-delivered later in improved quality, the new data replaces the faulty data.

- Discard data: Incorrect data can be discarded, for example, if the data set is to be used for training purposes in ML and sufficient correct data is available.

- Automatically correct errors during import: For example, if the correct values can be obtained from an additional data source, errors can be corrected during data integration.
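The options above can be sketched as a simple policy decision. This Python example is illustrative only: the `Policy` values, the dictionary acting as the "additional data source" and the notion of holding records back (for a manual fix by domain experts or an improved redelivery) are hypothetical names chosen for the sketch.

```python
from enum import Enum
from typing import Dict, List, Optional, Tuple

Record = Dict[str, object]


class Policy(Enum):
    DISCARD = "discard"          # e.g. ML training data with enough correct samples
    CORRECT_OR_HOLD = "correct"  # every data point matters, e.g. quality assessment


def handle_invalid(
    record: Record, policy: Policy, secondary: Dict[Tuple[str, str], float]
) -> Tuple[Optional[Record], bool]:
    """Return (record to store or None, True if it must wait for a fix or redelivery)."""
    if policy is Policy.DISCARD:
        return None, False
    key = (str(record["machine"]), str(record["ts"]))
    if key in secondary:
        # Automatic correction during import, using an additional data source.
        return {**record, "value": secondary[key], "corrected": True}, False
    # No automatic fix: hold back for domain experts or for improved redelivery.
    return None, True


def handle_all(
    invalid: List[Record], policy: Policy, secondary: Dict[Tuple[str, str], float]
) -> Tuple[List[Record], List[Record]]:
    """Split invalid records into corrected ones and ones that are held back."""
    stored: List[Record] = []
    held: List[Record] = []
    for record in invalid:
        fixed, hold = handle_invalid(record, policy, secondary)
        if fixed is not None:
            stored.append(fixed)
        elif hold:
            held.append(record)
    return stored, held


# Hypothetical example: a second source knows the correct value for one record.
secondary = {("m1", "2"): 21.7}
invalid: List[Record] = [
    {"machine": "m1", "ts": 2, "value": None},
    {"machine": "m2", "ts": 5, "value": None},
]
stored, held = handle_all(invalid, Policy.CORRECT_OR_HOLD, secondary)
```

With `Policy.DISCARD`, both records would simply be dropped, which is the easiest variant to implement.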

In practice, discarding incorrect data is the easiest solution to implement. However, if every single data point may be relevant for the planned evaluations, erroneous data must be corrected wherever possible. This can be the case, for example, in production quality assessment, when the production data for a defective workpiece is incorrect due to a sensor error. The data can either be corrected manually by domain experts, or the correct data can be supplied later.

The involvement of domain experts is essential here: on the one hand, they know the criteria for correct data, such as valid ranges for sensor values; on the other hand, they know how to deal with incorrect or incomplete data.

Data integration deals with the automated linking of data from different data sources. Depending on the application domain and the type of data, data can be linked using different methods, such as:

- Unique identifiers, analogous to foreign keys in the relational model

- Geographical or temporal proximity

- Domain-specific interrelationships such as sequences in manufacturing processes or in production lines
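The first two linking methods can be sketched in a few lines of Python. The orders, workpieces and sensor readings below are hypothetical; `link_by_key` behaves like an inner join on an identifier, and `nearest_reading` links a point in time to the closest sensor reading within a tolerance. Domain-specific relationships such as process sequences need rules specific to the application and are not covered here.

```python
from bisect import bisect_left
from typing import Dict, List, Optional, Tuple

Row = Dict[str, str]
Reading = Tuple[float, float]  # (timestamp in seconds, temperature)

# Hypothetical data: order_id acts like a foreign key into orders.
orders: Dict[str, Row] = {"o-17": {"product": "gear A"}, "o-18": {"product": "gear B"}}
workpieces: List[Row] = [{"id": "w1", "order_id": "o-17"}, {"id": "w2", "order_id": "o-18"}]
# Hypothetical sensor readings, sorted by timestamp.
readings: List[Reading] = [(100.0, 20.1), (101.0, 20.4), (105.0, 22.9)]


def link_by_key(rows: List[Row], lookup: Dict[str, Row], key: str) -> List[Row]:
    """Join each row with the lookup entry its identifier refers to (inner join)."""
    return [{**row, **lookup[row[key]]} for row in rows if row[key] in lookup]


def nearest_reading(series: List[Reading], ts: float, tolerance: float) -> Optional[Reading]:
    """Return the reading closest in time to ts, or None if none is within tolerance."""
    times = [t for t, _ in series]
    i = bisect_left(times, ts)
    # Only the neighbours directly before and after ts can be the closest ones.
    candidates = [series[j] for j in (i - 1, i) if 0 <= j < len(series)]
    if not candidates:
        return None
    best = min(candidates, key=lambda r: abs(r[0] - ts))
    return best if abs(best[0] - ts) <= tolerance else None


linked = link_by_key(workpieces, orders, "order_id")
match = nearest_reading(readings, 101.3, tolerance=1.0)
```

For tabular data in pandas, `pandas.merge_asof` with `direction="nearest"` and a `tolerance` covers the temporal case.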

After the data cleansing and data integration steps, data engineers can provide a data set suitable for further use by data scientists. The data transformation step mentioned above refers to ongoing adjustments of the data model to improve the performance of the queries run by data scientists.

Data storage and data modeling for Big Data

The cleansed and integrated data can then be stored in a suitable data storage solution. In the application area of Industry 4.0, for example, data is generated continuously by sensors, which often leads to data volumes in the terabyte range within a few months. Such data volumes can often no longer be handled by a classic relational database. Although there are scalable databases on the market that use the relational model, their high license costs rule them out for many implementation projects, especially in the SME sector.
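A quick back-of-the-envelope calculation shows how fast such volumes build up. All figures are assumptions for illustration: 200 sensors, 10 readings per sensor and second, and 100 bytes per uncompressed record.

```python
# Hypothetical plant: all figures are assumptions for illustration only.
sensors = 200            # number of sensors
rate_hz = 10             # readings per sensor and second
bytes_per_record = 100   # uncompressed record size incl. timestamp and IDs

bytes_per_second = sensors * rate_hz * bytes_per_record
bytes_per_day = bytes_per_second * 86_400
bytes_per_month = bytes_per_day * 30

print(f"{bytes_per_day / 1e9:.2f} GB per day")
print(f"{bytes_per_month / 1e12:.3f} TB per 30-day month")
print(f"{1e12 / bytes_per_month:.1f} months until 1 TB")
```

Under these assumptions the plant produces about 17 GB per day and roughly 0.5 TB per month, so the terabyte range is reached within about two months, before any replicas or derived data sets are counted.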

As an alternative, horizontally scalable NoSQL systems are available; the term stands for “Not only SQL” and covers data stores that use non-relational data models. Horizontal scalability means that such a system can be expanded by adding further hardware, so that it can handle practically unlimited data volumes. Many typical NoSQL systems are released under permissive licenses such as the Apache License and can therefore be used commercially without license costs. In addition, these systems do not require any special hardware, which further reduces acquisition costs. NoSQL systems and related technologies such as the Apache Hadoop ecosystem therefore offer a cost-effective way to run queries on data volumes in the terabyte range.
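Horizontal scaling relies on partitioning the data across nodes. The following Python sketch shows one common partitioning technique, consistent hashing with virtual nodes (used, for example, by Apache Cassandra; Apache HBase instead splits sorted key ranges into regions). It is an illustration, not the implementation of any particular system; node and key names are hypothetical. It shows that adding a node relocates only a fraction of the keys, and only onto the new node.

```python
import hashlib
from bisect import bisect_right, insort
from typing import Dict, List


def ring_position(value: str) -> int:
    """Map a string to a position on the hash ring (MD5 used only for spreading)."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Minimal consistent-hashing ring with virtual nodes (illustrative only)."""

    def __init__(self, nodes: List[str], vnodes: int = 64) -> None:
        self.vnodes = vnodes
        self.positions: List[int] = []    # sorted ring positions
        self.owners: Dict[int, str] = {}  # ring position -> physical node
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        """Scaling out: place the new node's virtual nodes on the ring."""
        for i in range(self.vnodes):
            pos = ring_position(f"{node}#{i}")
            self.owners[pos] = node
            insort(self.positions, pos)

    def node_for(self, key: str) -> str:
        """A key belongs to the first virtual node clockwise from its hash."""
        i = bisect_right(self.positions, ring_position(key)) % len(self.positions)
        return self.owners[self.positions[i]]


ring = HashRing(["node-1", "node-2", "node-3"])
keys = [f"sensor-{n}" for n in range(10_000)]
before = {k: ring.node_for(k) for k in keys}

ring.add_node("node-4")  # add hardware
after = {k: ring.node_for(k) for k in keys}

moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved) / len(keys):.0%} of the keys moved to another node")
```

With naive `hash(key) % N` partitioning, going from three to four nodes would relocate about three quarters of all keys; here roughly a quarter moves, which keeps rebalancing cheap when hardware is added.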

Particularly in the Big Data area, selecting a suitable NoSQL database and a suitable data model is crucial, because both largely determine the performance of the overall system, for data ingestion as well as for queries against the NoSQL system.

Both the choice of technology and the design of the data model are driven by the system requirements:

- What data volumes and data rates need to be imported?

- Which queries and evaluations are to be performed with the data?

- What are the performance requirements for the queries? Is it a real-time system?

A central question, for example, is whether the system only needs to support a fixed set of queries or should also allow flexible queries, e.g. using SQL.

When selecting the technology, a distinction can be made, for example, between always accessing data via a known key and also querying on the values of other attributes. In the first case, a system with the semantics of a distributed, sorted key-value map, such as Apache HBase, is suitable; in the second case, an in-memory analysis solution such as Apache Spark is one option. If the use of the data focuses primarily on the links between data items, a graph database should be considered.
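The difference between the two access patterns can be illustrated with a small in-memory stand-in. This Python sketch is not the HBase API: a dictionary plus a sorted key list merely emulate a sorted key-value store with hypothetical `machine#timestamp` keys. Lookups and range scans on the key touch only the relevant rows, while a query on a non-key attribute has to inspect every row.

```python
from bisect import bisect_left
from typing import Dict, List, Tuple

# Hypothetical rows keyed by "machine#timestamp".
rows: Dict[str, Dict[str, float]] = {
    "m1#0001": {"temp": 20.1},
    "m1#0002": {"temp": 35.7},
    "m2#0001": {"temp": 19.8},
    "m2#0002": {"temp": 21.0},
}
sorted_keys: List[str] = sorted(rows)


def get(key: str) -> Dict[str, float]:
    """Access via a known key: a direct lookup, no scan needed."""
    return rows[key]


def scan_prefix(prefix: str) -> List[Tuple[str, Dict[str, float]]]:
    """Range scan over contiguous sorted keys, e.g. all rows of one machine."""
    result = []
    i = bisect_left(sorted_keys, prefix)
    while i < len(sorted_keys) and sorted_keys[i].startswith(prefix):
        result.append((sorted_keys[i], rows[sorted_keys[i]]))
        i += 1
    return result


def filter_by_value(min_temp: float) -> List[str]:
    """Query on a non-key attribute: every row has to be inspected."""
    return [k for k in sorted_keys if rows[k]["temp"] >= min_temp]
```

On terabytes of data, the full scan in `filter_by_value` is the workload for which a parallel engine such as Apache Spark is better suited than a key-value store.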

In a Big Data system, data is typically stored denormalized for performance reasons, i.e. all data relevant to a query result should be stored together. The reason is that joins are very resource-intensive and time-consuming in distributed systems. The planned queries are therefore central to the design of the data model; for example, the attributes that mainly appear as parameters in the queries should be used as key attributes. This is also why the data model often has to be extended when new queries are added, to ensure that they run efficiently, so data engineering work is required continuously, even after the data has initially been loaded.
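The following Python sketch illustrates these design rules under assumed, hypothetical data: normalized source tables for machines, orders and readings are resolved once at write time into a denormalized table whose row key combines the main query parameters (machine, then zero-padded timestamp). The query "all readings of one machine in a time range" then becomes a key range without any join at read time; here the range is emulated by filtering sorted keys, whereas a sorted key-value store would read it contiguously.

```python
from typing import Dict, List

Row = Dict[str, object]

# Hypothetical normalized source data: answering a query would need two joins.
machines = {"m1": {"line": "L1"}, "m2": {"line": "L2"}}
orders = {"o-17": {"product": "gear A"}}
readings = [
    {"machine": "m1", "order": "o-17", "ts": 1700000000, "temp": 20.1},
    {"machine": "m1", "order": "o-17", "ts": 1700000060, "temp": 20.6},
    {"machine": "m2", "order": "o-17", "ts": 1700000030, "temp": 19.9},
]


def row_key(machine: str, ts: int) -> str:
    """Key from the main query parameters: machine first, then time.

    Zero-padding keeps lexicographic key order equal to numeric time order.
    """
    return f"{machine}#{ts:012d}"


def denormalize(readings: List[Row], machines: Dict, orders: Dict) -> Dict[str, Row]:
    """Resolve the joins once at write time and store everything a query needs."""
    table: Dict[str, Row] = {}
    for r in readings:
        table[row_key(r["machine"], r["ts"])] = {
            "temp": r["temp"],
            "line": machines[r["machine"]]["line"],
            "product": orders[r["order"]]["product"],
        }
    return table


def query(table: Dict[str, Row], machine: str, start: int, end: int) -> List[Row]:
    """Readings of one machine in [start, end): a key range, no join at read time."""
    lo, hi = row_key(machine, start), row_key(machine, end)
    return [table[k] for k in sorted(table) if lo <= k < hi]


table = denormalize(readings, machines, orders)
m1_rows = query(table, "m1", 1700000000, 1700000061)
```

A new query, for example by product, would not be served efficiently by this key design and would typically require an additional table keyed by product, which is why new queries often extend the data model.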

With its expertise in open source NoSQL databases, built up over more than ten years, RISC Software GmbH is a reliable consulting and implementation partner for introducing or expanding a solid data basis in your company, regardless of the area of application.

Use Cases for Data Engineering

Use Case 1: Corporate data integration

Data from different sources can be merged and used effectively in an integrated data model.

Use Case 2: Data preparation for AI / ML

Data engineering methods can be used to provide a large amount of consistent and complete training data for AI and ML.

Use Case 3: Transformation of the data model to improve data understanding

Data engineering can significantly improve data understanding by adapting the data model more closely to the use case. One example is the introduction of a graph database.

Use Case 4: Improved (faster) data usage

Data engineering can help significantly speed up interactive queries by adapting the data storage and data model.

Sources

[1] Blasch, E., Sung, J., Nguyen, T., Daniel, C. & Mason, A. (2019). Artificial Intelligence Strategies for National Security and Safety Standards.

[2] Maslow, A. H. (1943). A Theory of Human Motivation. Psychological Review, 50(4), pp. 370–396.


Author

DI Paul Heinzlreiter

Senior Data Engineer