Data quality: From information flow to information content

why clean data (quality) management pays off

by Sandra Wartner, MSc

Making decisions is not always easy – especially when they are relevant to the fundamental direction of the company and can thus influence a far-reaching corporate structure. This makes it all the more important to know as many influencing factors as possible in the decision-making process, to quantify facts and to incorporate them directly (instead of making assumptions) in order to minimize potential risks and achieve continuous improvements in the corporate strategy. A possible quick-win for companies can be derived from corporate data: future-oriented data quality management – a process that unfortunately often receives far too little attention. Why the existence of large data streams is usually not enough, what decisive role the state of the stored data plays in data analysis and decision making, how to recognize good data quality and why this can also become important for your company, we explain here.

Table of contents

- What is the cost of bad data?

- Data basis and data quality – What‘s behind it?

- How do you recognize poor data quality and how can these data deficiencies arise in the first place

- Data as fuel for machine learning models

- Data quality as a success factor

- Sources

- Author

What is the cost of bad data?

When shopping at the supermarket, we look for the organic seal of approval and product regionality; when buying new clothing, the material should be made from renewable raw materials and under no circumstances produced by child labor; and the electricity provider is selected according to criteria such as cleanliness and transparency – because we know what influence our decisions can have. So why not stay true to the principle of quality over quantity when it comes to data management?

In the age of Big Data, floods of information are generated every second, often serving as the basis for business decisions. According to a study by MIT [1], making the wrong decisions can cost up to 25% of sales. In addition to the financial loss, unnecessarily high resource input or additional effort is required to correct the resulting errors and correct the data, and the proportion of satisfied customers as well as the trust in the value of the data decreases. Google is not the only company that has had to deal with the drastic consequences of errors in its data, for example with its Google Maps product [2]. Address information at the wrong location even led to a demolition company accidentally razing the wrong house to the ground; incorrectly lowered kilometer information to the navigation destination via non-existent roads left drivers stranded in the desert or sights suddenly appeared in the wrong places. Also NASA had to watch on 23.09.1999 how the Mars Climate Orbiter and with it more than 120 million $ burned up during the approach to Mars – the reason: a unit error [3]. Even if the effects of poor data quality may not be quite as far-reaching as those of the major players, the topic of data quality nevertheless affects every company.

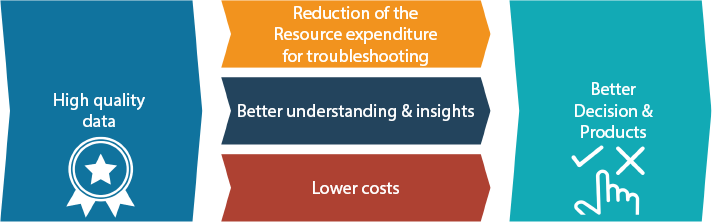

From a business perspective, data quality is not an IT problem, but a business problem. This usually results from the fact that business professionals are not aware of the importance of data quality, or are not aware of it enough, and that data quality management is also successively weak or missing altogether. Linking data quality practices with business requirements helps to identify and eliminate the causes of quality problems, to reduce error rates and costs, and ultimately to make better decisions.

Figure 1: From data quality to sustainable decisions and products

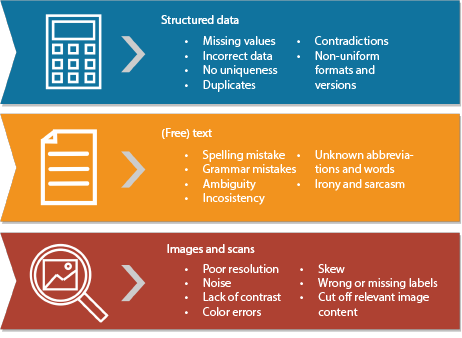

Despite the above criteria, it is not easy to describe good or bad data quality on the basis of more concrete characteristics, since data exists in a wide variety of structures that differ greatly in their properties. The collected data stock in the company is composed of different data depending on the degree of structuring:

- Structured data is information that follows a predefined format or structure (usually in tabular form) and may even be sorted systematically. This makes them particularly suitable for search queries for specific parts of information such as a specific date, postal code or name.

- Unstructured data, on the other hand, is present in a non-normalized, unidentifiable data structure, making it difficult to process and analyze. This includes, for example, images, audio files, videos or text.

- Semi-structured data follows a basic structure that includes both structured and unstructured data. A classic example of this is e-mails, for which, among other things, the sender, recipient, and subject must be specified in the message header, but the content of the message consists of arbitrary, unstructured text.

Data basis and data quality – What‘s behind it?

There are already many definitions of the term data quality, but a general statement about it can only be made to a limited extent, since good data quality is usually defined on a domain-specific basis. A large data set alone (quantity) is no indication that the data is valuable. The decisive factor for the actual usefulness of the data in the company is above all whether it correctly reflects reality (quality) and whether the data is suitable for the intended use case.

There are various general approaches and guidelines for assessing the quality of data. Often, good data quality is understood very narrowly as the correctness of content, ignoring other important aspects such as trustworthiness, availability, or usability. For example, Cai and Zhu (2015) [4] define the data quality criteria Availability, Relevance, Usability, Reliability, and Presentation Quality shown in Figure 2. In the following, we discuss some relevant points for the implementation of data-driven projects based on these criteria.

Figure 2: Data quality criteria according to Cai and Zhu (2015).

- Relevance: In data analysis projects, it is particularly important at the beginning to consciously consider whether the data on which the project is to be based is suitable for the desired use case. In AI projects, it is therefore always important to be able to answer the following question with “yes“: Is the information needed to answer the question even available in its entirety in the data? If even domain experts cannot identify this information in the data, how is an AI supposed to learn the connections? After all, machine learning algorithms only work with the data provided to them and cannot generate or utilize any information that is not contained in the data.

- Usability: Metadata is often needed to interpret data correctly and thus to be able to use it at all. Examples of this are coding, origin, or even time stamps. The origin can also provide information about the credibility of the data, for example. If the source of the data is not very trustworthy or if the data originates from human input, it may be worthwhile to check it carefully. Depending on the content of the data, good documentation is also important – if codes are used, for example, it may be important to know what they stand for in order to interpret them, or additional information may be needed to be able to read date values, time stamps or similar correctly.

- Reliability: Probably the most important aspect in the evaluation of data and its quality is how correct and reliable it is. The decisive factor is whether the data is comprehensible, whether it is complete and whether there are any contradictions in it – simply whether the information it contains is correct. If you already have the feeling that you do not trust your own data and its accuracy, you will not trust analysis results or AI models trained on them either. In this context, accuracy is often a decisive factor: for one question, roughly rounded values may be sufficient, while in another case accuracy to several decimal places is essential.

- Presentation quality: Logically, data must be able to be “processed“ not only by computers, but above all by people. In many cases, therefore, they must be well structured or be able to be processed so that they are also readable and understandable for us humans.

- Availability: At the latest, if one wants to actively use certain amounts of data and, for example, integrate them into an (AI) system, it must also be clarified who may access this data and when, and how this access is (technically) enabled. Above all, this is an important factor for the trustworthiness of resulting AI systems, this is an important factor. For real-time systems, timeliness is also crucial. After all, if you want to process data in real time (e.g., for monitoring production facilities), then it must be possible to access it quickly and it must be reliable.

How do you recognize poor data quality and how can these data deficiencies arise in the first place

Poor data quality is usually not as inconspicuous as one might think. A closer look quickly reveals a wide variety of deficiencies, depending on the degree to which the data is structured. Let‘s be honest – are you already familiar with some of the cases shown in Figure 3? If not, take the opportunity and start looking for these conflict generators, because you will most likely find some of them. These data can take many shapes in practical application and manifest themselves in various problematic issues such as image damage or legal consequences, among others (Figure 4 shows just a few of the negative effects). The costs of poor data quality can also be far-reaching, as we have already made clear in “What do bad data cost?“. But how can such problems arise in the first place?

Figure 3: Examples of poor data quality by

Degree of structuring of the data

Figure 4: Negative examples from practice

The root causes often lie in the lack of data management responsibilities or data quality management in the first place, but technical challenges can also cause problems. These errors often creep in over time. Particularly prone to errors are different data collection processes and, subsequently, the merging of data from a wide variety of systems or databases. Human input also produces errors (e.g. typing errors, confusion of input fields). Another problem lies in data aging: Especially when changes in data collection or recording take place (e.g. missing sensor data during machine changeover, lack of accuracy, too small/too large sampling rate, lack of know-how, changing requirements on the database), problems occur. Further risk factors are the often missing documentation and the faulty versioning of the data.

In practice, perfect data quality is usually a utopian notion, which is also shaped by many influencing factors that cannot be controlled or are difficult to control. However, this should not take away anyone‘s hope, because: Most of the time, even small measures achieve a big effect.

Data as fuel for machine learning models

It is not only in classical data analysis that special attention should be paid to the data in order to obtain the maximum informative value of the results. Especially in the field of artificial intelligence (AI), the available database plays a decisive role and can help a project to a successful conclusion or condemn it to failure. By using AI – specifically machine learning (ML) – frequently repetitive processes can be intelligently automated. Examples are the search for similar data, the derivation of patterns or the detection of outliers or anomalies. It is also essential to have a good understanding of data (domain expertise) in order to identify potential influencing factors and to be able to control them. Well-known principles such as “decisions are no better than the data on which they‘re based“ and the classic GIGO idea (garbage in, garbage out) clearly underline how essential the necessary database is for the learning process of ML models. Only if the data basis is representative and reflects reality as truthfully as possible can the model learn to generalize and successively make the right decisions.

Data quality as a success factor

Data quality should definitely be the top priority for data-based work. Furthermore, the awareness of the relevance of good data quality should be created or sharpened in order to be able to achieve positive effects throughout the company, to reduce costs and to be able to use freed-up resources more efficiently for the really important activities. Our conclusion: Good data quality management saves more than it costs, enables the use of new methods and technologies and helps to make sustainable decisions.

Sources

1 https://sloanreview.mit.edu/article/seizing-opportunity-in-data-quality/

2 https://www.googlewatchblog.de/2019/07/google-maps-fehler-katastrophen/

3 http://edition.cnn.com/TECH/space/9909/30/mars.metric/

4 Cai and Zhu (2015): The Challenges of Data Quality and Data Quality Assessment in the Big Data Era

Contact

Author

Sandra Wartner, MSc

Data Scientist