Transformer models conquer Natural Language Processing

How to make the most of pre-trained models like Google T5

by Sandra Wartner, MSc

Artificial intelligence (AI) has become an integral part of our everyday lives. Every day we are in contact with numerous systems that are based on AI – even if we are not always aware of it. However, it becomes noticeable when machines communicate with us in our language, as is the case with voice assistants.

Considerable progress has been made in AI-driven understanding of natural language in recent years, among other things through so-called Transformer models – including the T5 architecture developed by Google, which is presented in this article.

Table of contents

- What is Google T5?

- Parlez-vous français? Oui!

- How do I use T5 for my use case?

- Conclusion

- Sources

- Author

The field of Natural Language Processing (NLP) deals with the understanding of natural, human language. An introduction to the topic is provided by the specialist article in the first Insights magazine, “OK Google: What is Natural Language Processing?”.

Because building machine understanding of natural language requires huge amounts of text data, on the order of several gigabytes (e.g., the entire text of Wikipedia), training NLP systems from scratch involves considerable cost (six-digit euro amounts or more) and time[1] before the results reach an acceptable quality. Therefore, numerous researchers as well as large companies like Google or Facebook make pre-trained language models publicly available. Since these so-called base models have already learned a basic understanding of language, other researchers can build on them, adapt them for a specific project, extend them, and in turn share them with the NLP community.

This concept is called transfer learning. Here, models are first trained on huge amounts of data to gain a general understanding of basic concepts (in this case, natural language) and are then trained on more specific data to perform a concrete task. This not only allows models to be reused, but also reduces the amount of data needed for a concrete task.

When only limited data is available, zero-shot learning can be used. Here, a model is made to perform a task for which it has not been explicitly trained. One-shot and few-shot learning work according to the same principle, using one or a few examples. Thus, models can be adapted to a specific task with little or no data. Unfortunately, in many cases these approaches do not completely eliminate the need to collect data. First, zero-shot models often serve only as prototypes or baseline models, since they often cannot generalize sufficiently well (i.e., the quality of results decreases on new, unseen data) and are very susceptible to errors in the (training) data. Second, additional evaluation data is needed to quantify the quality of zero-shot models in the first place and to assess the value added by their use.
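The second point, quantifying quality with evaluation data, can be illustrated with a minimal sketch. It assumes exact-match accuracy as the metric, which is only one of several possible choices, and `toy_model` is a hypothetical stand-in for a real zero-shot model; the evaluation examples are invented for illustration.

```python
from typing import Callable, Iterable, Tuple


def normalize(text: str) -> str:
    """Lower-case and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def exact_match_accuracy(
    predict: Callable[[str], str],
    examples: Iterable[Tuple[str, str]],
) -> float:
    """Share of examples whose prediction equals the expected output."""
    examples = list(examples)
    if not examples:
        raise ValueError("evaluation set is empty")
    hits = sum(normalize(predict(x)) == normalize(y) for x, y in examples)
    return hits / len(examples)


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a zero-shot model (assumption, not a real model)."""
    return "positive" if "great" in prompt.lower() else "negative"


# Invented evaluation data: (input text, expected label)
eval_set = [
    ("The service was great.", "positive"),
    ("I waited two hours.", "negative"),
    ("Not great, not terrible.", "neutral"),
]

accuracy = exact_match_accuracy(toy_model, eval_set)
print(f"exact-match accuracy: {accuracy:.2f}")
```

Comparing this number for a zero-shot baseline and for a fine-tuned model on the same evaluation set is one simple way to estimate the value added by collecting training data.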

A major advance in NLP in recent years has been the development of so-called Transformer models. This is a special neural network architecture that uses so-called attention mechanisms and replaces the previously prevailing recurrent neural networks (RNNs) with deep feed-forward networks. This novel architecture, introduced in 2017, largely eliminated the weaknesses of the previous models. Among other things, it enables a better understanding of the context of individual words and makes it easier to handle larger data sets efficiently. Probably the best-known example of a family of Transformer models is BERT[2] (Bidirectional Encoder Representations from Transformers). The basic BERT models can be extended with task-specific components that are trained on the desired task (e.g., classifying sentences as having negative, neutral or positive sentiment). Systems based on the BERT architecture have achieved record-breaking results in numerous tasks since its publication in 2018 and are now an integral part of Google Search.

What is Google T5?

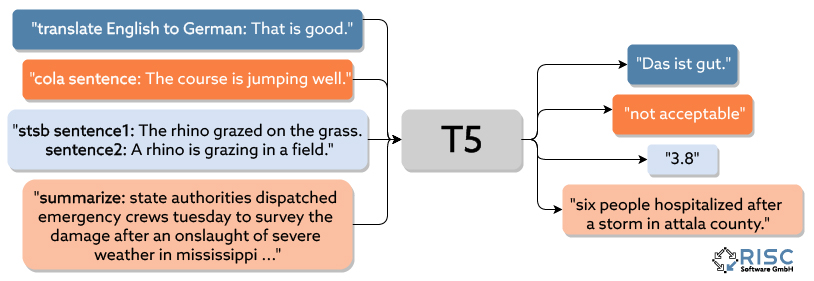

The T5 architecture developed by Google works very similarly to BERT, but differs in some respects. The Text-To-Text principle, which gives T5 (Text-To-Text Transfer Transformer) its name, means that for T5 models both input and output consist purely of text. This allows T5 models to be trained on arbitrary tasks without having to adapt the model structure itself to the task. Thus, any problem that can be formulated as text input to text output can be handled by T5. This includes, for example, classifying texts, summarizing a long text, or answering questions about the content of a text. Another distinctive feature of the T5 architecture is the ability to use a single model to solve several different tasks, as shown in Figure 1. For example, the pre-trained T5 models have already been trained on 17 different tasks. Among these, answering questions about a given text is a particular strength of T5. If the model is provided with the entire Wikipedia article on the history of France, for example, it can successfully return the correct answer “Louis XIV” to the question “Who became King of France in 1643?”
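To make the text-to-text idea concrete, the following sketch shows how different tasks could be turned into plain input strings. The prefixes follow the conventions commonly described for the original pre-trained T5 checkpoints; this is an assumption, and a fine-tuned model may expect different prefixes. The helper names are hypothetical, and the one-sentence context merely stands in for a full article.

```python
def as_question_answering(question: str, context: str) -> str:
    """Format a question and its context as a single text input."""
    return f"question: {question} context: {context}"


def as_summarization(text: str) -> str:
    """Format a text for summarization."""
    return f"summarize: {text}"


def as_translation(text: str, source: str, target: str) -> str:
    """Format a text for translation between two named languages."""
    return f"translate {source} to {target}: {text}"


# Short stand-in for a longer article (illustrative only)
context = "Louis XIV became King of France in 1643."

prompts = {
    "qa": as_question_answering("Who became King of France in 1643?", context),
    "summary": as_summarization(context),
    "translation": as_translation("Do you speak French?", "English", "French"),
}

for task, prompt in prompts.items():
    print(f"{task}: {prompt}")
```

Because every task is reduced to a string, the same model and the same training procedure can in principle be reused for all of them; only the input and target texts change.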

T5 has already achieved impressive results in various areas, but as everywhere in artificial intelligence, it is not a solution to every problem (see also the no-free-lunch theorem). A common approach to finding the best solution to a problem is to test multiple model architectures on the task and then compare the results.

Figure 1: T5 model

Parlez-vous français? Oui!

The mT5 models[4] are an extension of the T5 models and have been trained on huge amounts of text in a total of 108 different languages (as of 2022-01). This allows a single model to solve tasks in different languages. However, the quality of the results differs clearly between languages: the less a language is represented in the training data, the worse the results achieved in it.

It is also particularly useful that languages for which less data is available can benefit from training data in other languages. This is especially helpful in data acquisition, since English-language texts are often available in larger quantities than texts in other languages. Thus, even if tasks are to be solved in only one language, mT5 models can still be helpful if there is not enough training data available in the target language. However, the results obtained tend to be worse than when training only on the target language with a similar amount of total data.

How do I use T5 for my use case?

The first step should be to clarify some general, important questions about the specific use case:

- In which languages is the problem to be solved?

- Where does the data needed for training come from? Is it suitable for my use case?

- How can I check the quality of the model?

- Is test data available?

- What resources (computing power, time, financial resources, etc.) are available for training or fine-tuning?

- Which models are applicable for my use case?

- …

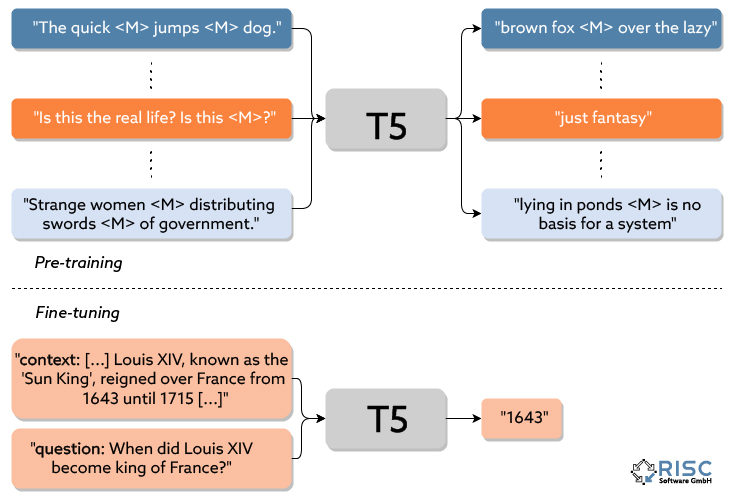

Figure 2: Pre-training and fine-tuning

For the potential use of T5, it must additionally be evaluated whether the use case can be formulated as a text-to-text task.

Next, a suitable pre-trained T5 model is selected. One of the best-known sources for this is the Hugging Face platform, which provides a variety of pre-trained state-of-the-art models as well as datasets as open-source solutions for the public. The models are often available in a variety of sizes, and a balance must be struck between the computational power or time required and the quality of the model. While larger models generally provide better results, they also place significantly greater demands on resources, so the largest model is not automatically the best choice.
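The trade-off between model size and resources can be sketched with a rough back-of-the-envelope estimate. The parameter counts below are approximate published figures for the original T5 checkpoints, and the default of 16 bytes per parameter (weights, gradients and two optimizer moments in 32-bit precision) is a simplifying assumption that ignores activations, batch size and memory-saving techniques. The function names are hypothetical.

```python
from typing import Optional

# Approximate parameter counts of the original T5 checkpoints
APPROX_PARAMS = {
    "t5-small": 60_000_000,
    "t5-base": 220_000_000,
    "t5-large": 770_000_000,
    "t5-3b": 3_000_000_000,
    "t5-11b": 11_000_000_000,
}


def estimated_training_gb(n_params: int, bytes_per_param: int = 16) -> float:
    """Very rough memory estimate for fine-tuning (assumption: 16 bytes/parameter)."""
    return n_params * bytes_per_param / 1e9


def largest_model_within(budget_gb: float, bytes_per_param: int = 16) -> Optional[str]:
    """Return the largest checkpoint whose estimate fits the budget, or None."""
    fitting = [
        (n_params, name)
        for name, n_params in APPROX_PARAMS.items()
        if estimated_training_gb(n_params, bytes_per_param) <= budget_gb
    ]
    return max(fitting)[1] if fitting else None


choice = largest_model_within(16.0)
print(f"largest model within 16 GB: {choice}")
```

Such an estimate only narrows down the candidates; whether the larger model is actually worth its cost still has to be checked against the evaluation data for the specific use case.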

Now it is time for the so-called fine-tuning. The pre-trained model (which at this point already has a basic understanding of language) is trained to solve a concrete task as shown in Figure 2. For this, training data is necessary, which shows the model which input should lead to which output. Not only the quantity, but also the quality of the data is crucial.
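What such training data might look like can be sketched as follows. The example converts labelled sentences into input/output text pairs, drops incomplete rows as a minimal quality check, and holds back part of the data for testing. The prefix `classify sentiment: `, the function names and the example rows are all hypothetical choices made for illustration.

```python
import random
from typing import List, Sequence, Tuple

Pair = Tuple[str, str]


def to_text_pairs(rows: Sequence[Tuple[str, str]],
                  prefix: str = "classify sentiment: ") -> List[Pair]:
    """Turn (text, label) rows into (input text, target text) pairs.

    Rows with an empty text or label are dropped as a basic quality check.
    """
    return [
        (f"{prefix}{text.strip()}", label.strip().lower())
        for text, label in rows
        if text.strip() and label.strip()
    ]


def train_test_split(pairs: Sequence[Pair], test_fraction: float = 0.25,
                     seed: int = 0) -> Tuple[List[Pair], List[Pair]]:
    """Shuffle reproducibly and hold back a fraction of the pairs for testing."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Invented example rows: (text, label)
rows = [
    ("The support team was very helpful.", "Positive"),
    ("The delivery arrived late.", "negative"),
    ("", "positive"),  # incomplete row, will be dropped
    ("The package arrived on Tuesday.", "neutral"),
    ("I would not order here again.", "negative"),
]

pairs = to_text_pairs(rows)
train_pairs, test_pairs = train_test_split(pairs)
print(len(train_pairs), "training pairs,", len(test_pairs), "test pair(s)")
```

Keeping the test pairs strictly separate from the training pairs is what later makes it possible to judge how well the fine-tuned model generalizes.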

Challenges include the choice of the concrete base model, the acquisition and quality control of the training data, and the preparation of the data in a format that can be read by the model. Additional difficulties arise, for example, with longer text sequences, since Transformer models have a limit in terms of text length.
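One common way to deal with the length limit is to split a long text into overlapping windows and process each window separately. The sketch below illustrates the idea under simplifying assumptions: whitespace-separated words stand in for the model's real subword tokens, and the defaults of 512 and 64 are illustrative values only. The function name is hypothetical.

```python
from typing import List


def split_into_windows(text: str, max_tokens: int = 512,
                       overlap: int = 64) -> List[str]:
    """Split text into overlapping windows of at most max_tokens words.

    Assumption: words approximate tokens. A real tokenizer usually produces
    more tokens than words, so the limit should be chosen conservatively.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must satisfy 0 <= overlap < max_tokens")

    tokens = text.split()
    step = max_tokens - overlap
    windows = []
    for start in range(0, len(tokens), step):
        windows.append(" ".join(tokens[start:start + max_tokens]))
        if start + max_tokens >= len(tokens):
            break  # the last window already reaches the end of the text
    return windows


sample = " ".join(f"w{i}" for i in range(10))
windows = split_into_windows(sample, max_tokens=4, overlap=1)
for window in windows:
    print(window)
```

The overlap reduces the risk that a relevant passage is cut in half at a window boundary, at the price of processing some text twice.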

RISC Software GmbH has been researching the use of T5 models for some time. For example, a system for recognizing and assigning proper names in texts (Named Entity Recognition, or NER) could be extended and improved using T5. For this purpose, the already pre-trained ability of T5 to answer questions about a given text is used. The T5 model can answer questions such as “Which person is involved?” or “Which company is involved?” and thereby assist the NER system.
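The general idea of asking one question per entity type can be sketched as follows. This is not the actual RISC Software implementation, which the article does not describe in detail; `answer_fn` is a hypothetical callable that wraps a question-answering model, `toy_answer_fn` is a stand-in for it, and the example sentence is made up.

```python
from typing import Callable, Dict

# One question per entity type, taken from the examples in the text
ENTITY_QUESTIONS = {
    "PERSON": "Which person is involved?",
    "COMPANY": "Which company is involved?",
}


def extract_entities(text: str, answer_fn: Callable[[str], str],
                     questions: Dict[str, str] = ENTITY_QUESTIONS) -> Dict[str, str]:
    """Ask one question per entity type and collect the answers."""
    found = {}
    for label, question in questions.items():
        answer = answer_fn(f"question: {question} context: {text}").strip()
        # Keep only answers that literally occur in the text, as a simple
        # guard against answers the model made up.
        if answer and answer in text:
            found[label] = answer
    return found


def toy_answer_fn(prompt: str) -> str:
    """Hypothetical stand-in for a question-answering model."""
    if "Which person" in prompt:
        return "Ada Lovelace"
    if "Which company" in prompt:
        return "Example Corp"
    return ""


# Made-up example sentence
sentence = "Ada Lovelace joined Example Corp in 2021."
entities = extract_entities(sentence, toy_answer_fn)
print(entities)
```

In a setup like this, the answers would typically be treated as additional candidates that an existing NER system can confirm or reject, rather than as final results.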

Conclusion

Due to its design, the T5 architecture is suitable for a wide variety of tasks (or combinations of them) and has already delivered impressive results in numerous application areas. It will be exciting to see how these technologies develop in the future and what further successes can be achieved with them.

Sources

[1] Sharir, Or, Barak Peleg, and Yoav Shoham. “The cost of training NLP models: A concise overview.” arXiv preprint arXiv:2004.08900 (2020).

[2] Devlin, Jacob, et al. “BERT: Pre-training of deep bidirectional transformers for language understanding.” arXiv preprint arXiv:1810.04805 (2018).

[3] Raffel, Colin, et al. “Exploring the limits of transfer learning with a unified text-to-text transformer.” arXiv preprint arXiv:1910.10683 (2019).

[4] Xue, Linting, et al. “mT5: A massively multilingual pre-trained text-to-text transformer.” arXiv preprint arXiv:2010.11934 (2020).

[5] Adam Roberts and Colin Raffel. “Exploring Transfer Learning with T5: the Text-To-Text Transfer Transformer.” https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html

Author

Sandra Wartner, MSc

Data Scientist