AI-supported modeling: revolution in oral surgery

by Bertram Sabrowsky-Hirsch, MSc

The combination of Artificial Intelligence (AI) and medical imaging is revolutionizing maxillofacial surgery. Automated modeling methods allow personalized patient models to be created more efficiently and precisely. This enables improved diagnoses, customized treatments, and faster surgical preparation.

- Automatic modeling of patients

- Pipeline-supported development of modeling methods

- Collaboration with CADS GmbH

- Source Directory

- Author

Automatic Modeling of Patients

Patient-specific modeling has significant potential in diagnosis and treatment and is a crucial step toward personalized medicine. In maxillofacial surgery, 3D models of the examined anatomy not only provide an intuitive visualization but also serve as the basis for surgical planning and the design of patient-specific implants. In practice, these models are created by modeling experts who process the medical image data with specialized software tools, a time-consuming and costly process. Advances in AI-based annotation now make comprehensive automated solutions technically feasible. However, their use outside research remains limited because preparing suitable datasets for training the methods is time-consuming. In a collaboration between RISC Software GmbH and CADS GmbH, automatic modeling methods were developed efficiently with the help of an AI-supported pipeline.

Pipeline-Supported Development of Modeling Methods

As part of its research activities, the Medical Informatics Unit of RISC Software GmbH is developing an AI-supported pipeline for modeling medical datasets. Unlike comparable technologies such as the AI platform MONAI [1], this pipeline also supports the method development process itself. It starts with the review, selection, and stratification of the database and allows various AI-based and classical image processing methods to be chained into workflows that can be applied both to individual patient data and to complete datasets. Intermediate steps, such as training AI methods or computing atlas datasets, run as standalone processes, just like the final modeling method that is integrated into end applications. This makes the workflows data-agnostic and maximizes their reusability.

Figure 1: The flexible module concept of the pipeline allows processing steps to be arbitrarily chained and embedded into workflows, which can in turn be integrated into overarching processes.
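The module concept can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that a processing step is any function mapping a NumPy image volume to another volume. The names `Workflow`, `clip_intensities`, `normalize`, and `threshold_segment` are hypothetical and not part of the actual RISC pipeline; the threshold step merely stands in for an AI model such as a trained segmentation network. Because a workflow is itself callable, it can be nested inside another workflow, and the same workflow can run on one patient or on a whole dataset.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence

import numpy as np

# Hypothetical interface: a step maps one image volume to another.
Step = Callable[[np.ndarray], np.ndarray]


@dataclass
class Workflow:
    """A chain of steps that can itself be used as a step (hypothetical)."""

    name: str
    steps: Sequence[Step]

    def __call__(self, volume: np.ndarray) -> np.ndarray:
        # Feed the output of each step into the next one.
        for step in self.steps:
            volume = step(volume)
        return volume

    def run_on_dataset(self, dataset: Dict[str, np.ndarray]) -> Dict[str, np.ndarray]:
        # Apply the identical chain to every case of a dataset.
        return {case_id: self(volume) for case_id, volume in dataset.items()}


def clip_intensities(volume: np.ndarray) -> np.ndarray:
    # Classical step: clip intensities to a fixed window.
    return np.clip(volume, -1000.0, 3000.0)


def normalize(volume: np.ndarray) -> np.ndarray:
    # Classical step: z-score normalization (guards against zero variance).
    std = volume.std()
    return (volume - volume.mean()) / (std if std > 0 else 1.0)


def threshold_segment(volume: np.ndarray) -> np.ndarray:
    # Placeholder for an AI segmentation model: a plain threshold.
    return (volume > 1.0).astype(np.uint8)


# A workflow nested inside another workflow.
preprocess = Workflow("preprocess", [clip_intensities, normalize])
modeling = Workflow("modeling", [preprocess, threshold_segment])
```

For example, `modeling(volume)` processes a single patient scan, while `modeling.run_on_dataset({"case_a": vol_a, "case_b": vol_b})` applies the identical chain to every case. Treating workflows and steps uniformly is what makes arbitrary nesting possible in this sketch.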

The pipeline has already been used successfully in several research projects, such as the automatic modeling of aneurysm patients for the MEDUSA surgery simulator [2]. In these projects, it enables the automated creation of complex patient models, which in turn support anatomy visualization, automated feature calculation, phantom printing, and hybrid surgical simulation. By integrating modern AI methods such as the nnU-Net framework [3], the pipeline achieves state-of-the-art results.
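As an illustration of automated feature calculation, the following sketch derives two simple geometric features from a segmentation label map: the volume of a structure and its bounding-box extent. The function names and the choice of features are hypothetical; the source does not specify which features the pipeline computes. Voxel spacing is assumed to be given in millimetres per axis, in the same axis order as the array.

```python
from typing import Tuple

import numpy as np


def structure_volume_ml(mask: np.ndarray, label: int, spacing_mm: Tuple[float, ...]) -> float:
    """Volume of one labeled structure in millilitres (1 ml = 1000 mm^3)."""
    voxel_volume_mm3 = float(np.prod(spacing_mm))
    n_voxels = int(np.count_nonzero(mask == label))
    return n_voxels * voxel_volume_mm3 / 1000.0


def bounding_box_mm(mask: np.ndarray, label: int, spacing_mm: Tuple[float, ...]) -> Tuple[float, ...]:
    """Axis-aligned extent of a structure in mm; zeros if the label is absent."""
    coords = np.argwhere(mask == label)  # (N, ndim) voxel indices
    if coords.size == 0:
        return tuple(0.0 for _ in spacing_mm)
    # Extent in voxels per axis, inclusive of both boundary voxels.
    extent_vox = coords.max(axis=0) - coords.min(axis=0) + 1
    return tuple(float(e * s) for e, s in zip(extent_vox, spacing_mm))
```

Because both functions only need a label map and the voxel spacing, they could run unchanged on any output of a modeling workflow, which fits the idea of data-agnostic, reusable processing steps.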

Figure 2: Modeling of aneurysm patients in the MEDUSA research project.

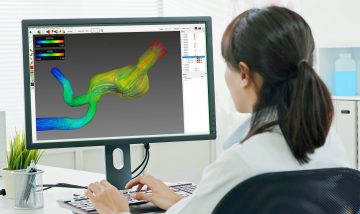

Figure 3: Modeling of patients in facial and jaw surgery from the collaboration with CADS GmbH.

Collaboration with CADS GmbH

In the context of the collaboration, the AI-supported pipeline was used for the annotation process as well as for training and validating AI methods. The annotation process benefited from the automated preparation of image data for external service providers and from the subsequent validation and integration of the returned annotations into the database. Training, validation, and statistical evaluation of the AI methods were likewise fully automated and can easily be reapplied as the database grows.

The results were presented at AAPR 2023 [4] and convinced the project partner's medical experts. CADS GmbH eventually integrated the pipeline into an annotation platform, which will enable medical experts to generate patient-specific models automatically from image data. The pipeline also supports the ongoing expansion of the database with new annotations and the subsequent optimization of the AI methods, so that the methods improve continuously and additional anatomical structures can be added to the database.

The collaboration continues to extend the pipeline and apply it to further anatomical structures. A particular focus is the automatic assessment and selection of image data according to quality criteria that determine their suitability for modeling, which will make the annotation platform even more efficient for its users.
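The automated validation and statistical evaluation could, for example, compare predicted and reference label maps using the Dice similarity coefficient, a common metric for segmentation quality. The sketch below assumes this metric and summarizes it per structure as mean and population standard deviation across cases; the source does not state which metrics were actually used, and the structure name `mandible` in the usage note is a hypothetical placeholder.

```python
import statistics
from typing import Dict, List, Tuple

import numpy as np


def dice(pred: np.ndarray, ref: np.ndarray, label: int) -> float:
    """Dice coefficient 2|P∩R| / (|P| + |R|) for one label."""
    p = pred == label
    r = ref == label
    denom = int(p.sum() + r.sum())
    if denom == 0:
        # Structure absent in both maps: treated as perfect agreement (a convention).
        return 1.0
    overlap = int(np.logical_and(p, r).sum())
    return 2.0 * overlap / denom


def evaluate(
    cases: List[Tuple[np.ndarray, np.ndarray]], labels: Dict[str, int]
) -> Dict[str, Dict[str, float]]:
    """Per-structure Dice statistics over (prediction, reference) pairs."""
    summary = {}
    for name, label in labels.items():
        scores = [dice(pred, ref, label) for pred, ref in cases]
        summary[name] = {
            "mean": statistics.mean(scores),
            "std": statistics.pstdev(scores),
            "n": len(scores),
        }
    return summary
```

A call such as `evaluate(cases, {"mandible": 1})` yields one summary entry per structure; rerunning it after each database update is one way the evaluation could be repeated automatically as the database grows.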

Figure 4: Examples of automatically modeled datasets of patients in facial and jaw surgery.
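The quality-based assessment and selection of image data mentioned above could take the form of rule checks on scan metadata. The following sketch is hypothetical: the two criteria (maximum voxel spacing and minimum field of view) and their thresholds are illustrative assumptions, not the criteria used in the collaboration. Rejected scans keep a list of reasons so that each decision remains traceable.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical thresholds, purely illustrative.
MAX_SPACING_MM = 0.5
MIN_EXTENT_MM = (60.0, 80.0, 80.0)  # (z, y, x)


@dataclass
class ScanInfo:
    case_id: str
    spacing_mm: Tuple[float, float, float]  # voxel size (z, y, x)
    shape: Tuple[int, int, int]             # number of voxels (z, y, x)


def quality_issues(scan: ScanInfo) -> List[str]:
    """Return the reasons a scan fails the (hypothetical) criteria."""
    issues = []
    if max(scan.spacing_mm) > MAX_SPACING_MM:
        issues.append("resolution too coarse")
    extent = [n * s for n, s in zip(scan.shape, scan.spacing_mm)]
    if any(e < m for e, m in zip(extent, MIN_EXTENT_MM)):
        issues.append("field of view too small")
    return issues


def select_for_modeling(scans: List[ScanInfo]) -> Tuple[List[str], Dict[str, List[str]]]:
    """Split scans into accepted case IDs and rejected ones with reasons."""
    accepted: List[str] = []
    rejected: Dict[str, List[str]] = {}
    for scan in scans:
        issues = quality_issues(scan)
        if issues:
            rejected[scan.case_id] = issues
        else:
            accepted.append(scan.case_id)
    return accepted, rejected
```

Keeping each criterion as a separate check makes it easy to add or tune rules later without changing the selection logic.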

Source Directory

- [1] Cardoso, M. Jorge, et al. “MONAI: An open-source framework for deep learning in healthcare.” arXiv preprint arXiv:2211.02701 (2022).

- [2] Research project MEDUSA, medusa.health/de

- [3] Isensee, Fabian, et al. “nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation.” Nature Methods 18.2 (2021): 203-211.

- [4] Sabrowsky-Hirsch, Bertram, et al. “Automatic Anatomical Annotation of CBCT Scans for Maxillofacial Prosthetics.” Proceedings of the Joint Austrian Computer Vision and Robotics Workshop 2023, Verlag der TU Graz, 2023.

Author

Bertram Sabrowsky-Hirsch, MSc

Researcher & Developer