Reengineering: An engineering solution shines in new splendour through web porting

Why do engineering solutions also need a web version?

by Martin Hochstrasser, MSc, DI (FH) Josef Jank and DI (FH) Alexander Leutgeb

A well-known proverb says: “Nothing is as constant as change” (Heraclitus of Ephesus). This also applies to software, and especially to its user interfaces, which are increasingly shifting towards web technologies because these make applications available to a wide range of users on all platforms without installation. Even successful desktop applications face this pressure to modernise; otherwise they risk being discontinued and losing the resources needed for further development. For older software systems, such new requirements often lead to a complete redevelopment. Many see this as an opportunity to shed old burdens and switch to their preferred platform and programming language. In the initial euphoria, however, the effort and risk are often underestimated, and many of these projects far exceed the originally estimated costs.

A well-considered overall strategy is therefore important for a technically and economically successful implementation of such projects. The goal should be a minimally invasive solution in which only the parts that are actually affected are changed. For all other parts, the rule is: “Never change a running system”. This avoids unnecessary effort and sources of error. Customers receive operational software at an early stage, can give continuous feedback on it and thus steer further development in the right direction. In addition, the phase in which the existing software has to be maintained in parallel with the new development is kept short. Modernisation or reengineering makes the software technologically fit for the future, and the potentially broader user base makes future investments easier to justify. Combining this reengineering strategy with an agile process keeps the effort low and achieves a good cost-benefit ratio.

Table of contents

- Why do engineering solutions also need a web version?

- Use-Case: Reengineering of the desktop application HOTINT for multi-body simulation

- Achieving the highest possible customer benefit in just three months

- Possible solutions

- Implementation concept

- Technical realisation and implementation

- Summary / Outlook

- Authors

Use-Case: Reengineering of the desktop application HOTINT for multi-body simulation

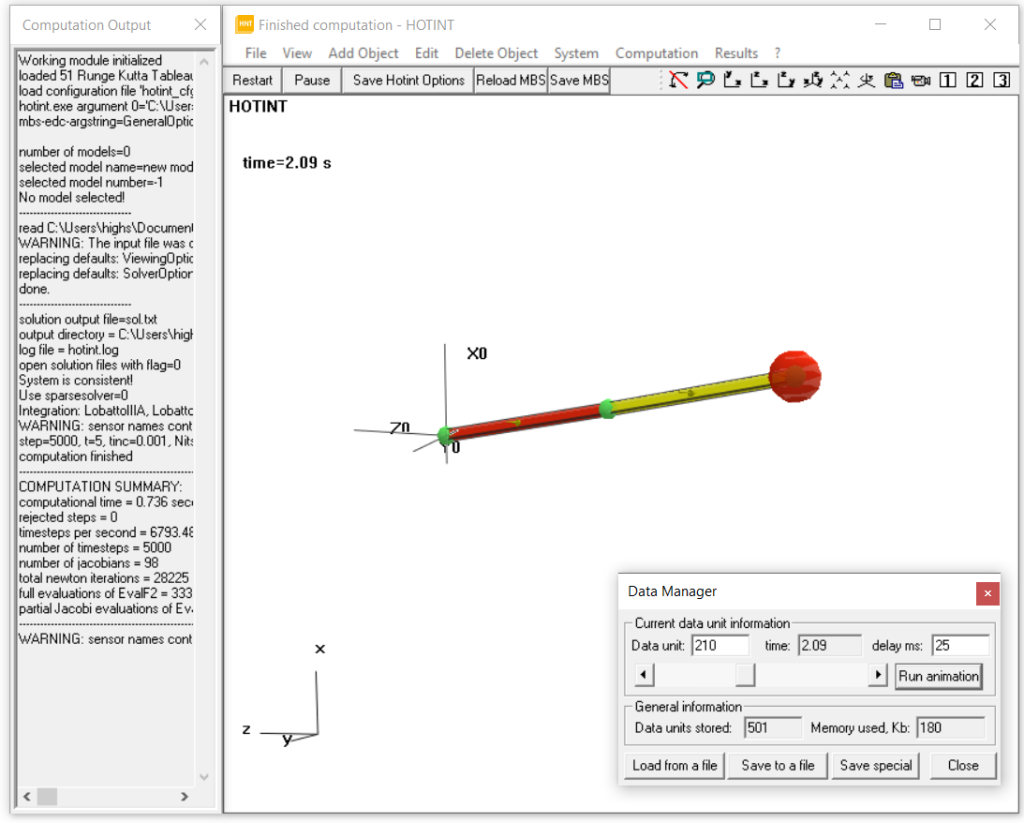

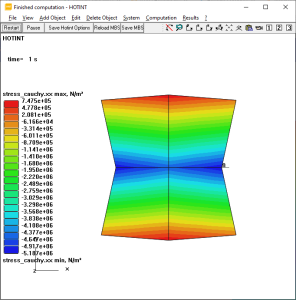

HOTINT is a free software package for modelling, simulating and optimising mechatronic systems, especially flexible multibody systems. It includes solvers for static, dynamic and modal analyses, a modular object-oriented C++ system framework, a comprehensive element library and a graphical user interface with tools for visualisation and post-processing. HOTINT has been under continuous development for more than 25 years and is currently being extended by the Linz Center of Mechatronics GmbH (LCM) in several scientific and industrial projects, both open and closed source. Today, the focus is on modelling, simulating and optimising complex mechatronic systems (including control), i.e. general mechanical systems combined with electrical, magnetic or hydraulic components (e.g. sensors and actuators).

Parameterised model setups can be implemented via the HOTINT scripting language or directly within the C++ modelling framework. Other important features include interface support, e.g. a versatile TCP/IP interface for coupling with other simulation tools (such as co-simulation with MATLAB/Simulink or coupling with a particle simulator to analyse fluid-structure and particle-structure interaction), and integration with the optimisation framework SyMSpace. The software is implemented as a C++ application that uses Microsoft’s MFC for the user interface and the OpenGL programming interface for 3D visualisation.

Screenshot 1: old desktop application HOTINT

Achieving the highest possible customer benefit in just three months

The framework conditions and primary goals were defined together with LCM in the very first meetings. The overriding goal was to create a web interface for the existing desktop application. However, it was unclear to everyone involved whether the envisaged solution ideas could be implemented within the planned timeframe of three months or whether unforeseeable limits or problems would arise. Therefore, the final results were deliberately not defined precisely; instead, the risk was limited by timeboxing (incremental product development within a fixed period of time). The team's task was to generate as much customer benefit as possible in the time available, ideally making the complete current range of functions available in the web client. In keeping with the agile approach, the risk was further reduced by two-week sprints with joint reviews, on which the planning of the subsequent sprints was based. The first sprint of each phase resembled a spike, in which the basic feasibility of a technical story and the effort it requires are determined. Based on this, the concrete implementation was driven forward in the following sprints.

In hindsight, this lightweight process worked well for the project, but that does not necessarily hold for other projects. If the clients have little experience with agile methods and software development, considerably more persuasion or a more formal process may be needed. In this case, the well-coordinated team already had many years of experience in 3D visualisation and was therefore able to make the right decisions based on that experience. For technically demanding topics in particular, such experience matters more than a process that is as refined as possible (without wishing to downplay the importance of process). For further information, please refer to our articles “Agile vs. classic software development”, “Software reengineering: When does the old system become a problem?” and “Modernisation of software”, whose approaches and ideas were also applied here.

Possible solutions

The starting point was a classic desktop application implemented in C++ with the Microsoft Foundation Classes (MFC), using OpenGL for the 3D representation. The goal was to make the application additionally operable via a web browser. In principle, there are two ways to port the native HOTINT C++ application to a web application:

As a first approach, a purely client-side web application can be implemented: either completely re-implemented with web technologies, without reusing any source code, or, alternatively, created by semi-automatically porting the C++ application to WebAssembly using Emscripten.

As a second approach, a client/server-based web application can be implemented, in which HOTINT runs as a C++ server and communicates with the client-side web application via a protocol for structured data exchange (parameters, result data, 3D visualisation, mouse interaction). The 3D visualisations can be transmitted either as structured data (the complete scene or only the changed parts) or directly as images (image streaming).
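
The structured exchange in the client/server approach can be pictured as a small set of typed messages. The following is a minimal sketch under assumptions: all type names, field names and the `describeMessage` helper are hypothetical and not taken from HOTINT. It only models the four kinds of data listed above and shows how a discriminated union lets the compiler check that every message kind is handled.

```typescript
// Hypothetical message types for a HOTINT client/server protocol.
// The names are illustrative only; they model the four kinds of data listed
// in the text: parameters, result data, 3D visualisation and mouse interaction.

type ParameterMessage = { kind: "parameters"; values: Record<string, number> };
type ResultMessage = { kind: "result"; timeStep: number; quantity: string; data: number[] };
// A 3D view travels either as structured scene data or as an encoded image.
type SceneMessage = { kind: "scene"; mode: "full" | "delta"; payload: string };
type ImageMessage = { kind: "image"; width: number; height: number; jpeg: Uint8Array };
type MouseMessage = { kind: "mouse"; action: "rotate" | "pan" | "zoom" | "pick"; x: number; y: number };

type ServerMessage = ParameterMessage | ResultMessage | SceneMessage | ImageMessage;
type ClientMessage = ParameterMessage | MouseMessage;

// Exhaustive dispatch on the "kind" discriminant: adding a new message type
// without handling it here becomes a compile-time error.
function describeMessage(msg: ServerMessage | ClientMessage): string {
  switch (msg.kind) {
    case "parameters":
      return `parameters: ${Object.keys(msg.values).length} value(s)`;
    case "result":
      return `result '${msg.quantity}' at step ${msg.timeStep}: ${msg.data.length} value(s)`;
    case "scene":
      return `scene (${msg.mode}): ${msg.payload.length} char(s)`;
    case "image":
      return `image ${msg.width}x${msg.height}: ${msg.jpeg.length} byte(s)`;
    case "mouse":
      return `mouse ${msg.action} at (${msg.x}, ${msg.y})`;
    default: {
      const unreachable: never = msg;
      return unreachable;
    }
  }
}
```

The split into server and client message types mirrors the two directions of the exchange: the client mainly sends parameters and mouse input, while the server returns results and views, either as `scene` (full or delta) or as `image`.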

A purely client-side web application was ruled out in advance, as a complete re-implementation would have exceeded the project scope. Semi-automated porting was not possible because HOTINT uses closed-source libraries from Intel, Microsoft and others, which Emscripten cannot compile without their source code. Another option would have been to build on ParaView, which already offers a client/server-based web application (ParaViewWeb). For the 3D visualisation, there are two options:

- Pure client-side rendering of the 3D scene

- Server-side rendering of the 3D scene and transfer of the image to the client (image stream).

Both variants can also be combined by overlaying them in the client (hybrid 3D visualisation). ParaView uses the VTK library for visualisation, and VTK already provides the infrastructure needed for client/server-based 3D visualisation on the web (vtkWeb). Which variant is appropriate (server-side image stream, purely client-side rendering or hybrid) depends on the complexity of the 3D scene to be transmitted and the associated data volume.
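
The dependence on data volume can be made concrete with a rough cost comparison. The sketch below is an assumption, not the project's decision procedure: it compares the bytes that structured scene transfer (initial scene plus changed parts per time step) and image streaming would each cause over a viewing session, and falls back to the image stream when the scene is too large for the client. The field names, the memory limit and the example numbers are hypothetical, and the hybrid variant is not modelled.

```typescript
// Hypothetical heuristic: compare the bytes each variant would transfer.
// The hybrid (overlay) variant is not modelled here.
type Variant = "client-side" | "image-stream";

interface SceneEstimate {
  initialSceneBytes: number; // one-time transfer of the structured scene description
  deltaBytesPerStep: number; // changed parts per time step (0 for a static scene)
  imageBytesPerStep: number; // one compressed, server-rendered image
  steps: number;             // time steps the user is expected to view
}

function transferCosts(s: SceneEstimate): { structured: number; image: number } {
  return {
    structured: s.initialSceneBytes + s.deltaBytesPerStep * s.steps,
    image: s.imageBytesPerStep * s.steps,
  };
}

function chooseVariant(s: SceneEstimate, clientMemoryLimitBytes: number): Variant {
  // A scene too large for the client can only be rendered on the server.
  if (s.initialSceneBytes > clientMemoryLimitBytes) return "image-stream";
  const { structured, image } = transferCosts(s);
  return structured <= image ? "client-side" : "image-stream";
}
```

With made-up numbers, a static result scene of 5,000,000 bytes viewed over 200 steps at 50,000 bytes per image costs 5,000,000 bytes as structured data versus 10,000,000 bytes as images, so client-side rendering wins. If every step also changes 1,000,000 bytes of geometry, the structured variant grows to 205,000,000 bytes and the image stream wins. This matches the intuition that animations favour image streaming while static result scenes favour a one-time scene transfer.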

Implementation concept

The chosen client/server architecture required the rendering of the 3D scenes to be adapted. The original idea was to implement client-side rendering exclusively with the VTK library. During implementation, however, it turned out that HOTINT's internal rendering interface could not be converted into an abstract scene description for VTK without great effort. Moreover, since the simulation kernel updates the 3D visualisation in every time step of the animation, mapping it to a different rendering API would have caused performance losses.

Therefore, the following approach was chosen: OpenGL continues to be used unchanged, but instead of drawing to the screen, HOTINT renders into an image (off-screen rendering), which is transferred from the server to the client. This approach offers the following advantages:

- The core rendering in HOTINT requires no major changes.

- Extensions and adaptations to HOTINT's element routines can be made in the familiar way.

- The changes are confined to the new web backend, so the maintainability of HOTINT is unaffected.
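
The advantages above rest on one design idea: the drawing code does not know where its output ends up, so only the render target changes. HOTINT realises this with OpenGL in C++; the following TypeScript sketch merely illustrates the principle with a tiny software "renderer". `RenderTarget`, `OffscreenImage` and `drawElement` are hypothetical names, and `drawElement` stands in for an unchanged element drawing routine.

```typescript
// Sketch of the design idea: drawing code targets an abstract surface, so
// switching from on-screen output to an off-screen image does not touch it.
type RGB = readonly [number, number, number];

interface RenderTarget {
  readonly width: number;
  readonly height: number;
  fillRect(x: number, y: number, w: number, h: number, color: RGB): void;
}

// Off-screen target: renders into a tightly packed RGB byte buffer.
class OffscreenImage implements RenderTarget {
  readonly pixels: Uint8Array;

  constructor(readonly width: number, readonly height: number) {
    this.pixels = new Uint8Array(width * height * 3);
  }

  fillRect(x: number, y: number, w: number, h: number, color: RGB): void {
    // Clip the rectangle to the image bounds.
    const x0 = Math.max(0, x);
    const y0 = Math.max(0, y);
    const x1 = Math.min(this.width, x + w);
    const y1 = Math.min(this.height, y + h);
    for (let row = y0; row < y1; row++) {
      for (let col = x0; col < x1; col++) {
        const i = (row * this.width + col) * 3;
        this.pixels[i] = color[0];
        this.pixels[i + 1] = color[1];
        this.pixels[i + 2] = color[2];
      }
    }
  }

  pixelAt(x: number, y: number): RGB {
    const i = (y * this.width + x) * 3;
    return [this.pixels[i], this.pixels[i + 1], this.pixels[i + 2]];
  }
}

// Stand-in for an unchanged element drawing routine: it only knows the
// RenderTarget interface, not whether output goes to a window or an image.
function drawElement(target: RenderTarget): void {
  target.fillRect(0, 0, target.width, target.height, [255, 255, 255]); // background
  target.fillRect(2, 1, 3, 2, [200, 0, 0]);                              // the "element"
}
```

An on-screen target would implement the same interface, so `drawElement` works unchanged with either; this is the property that keeps the existing element routines maintainable in the familiar way.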

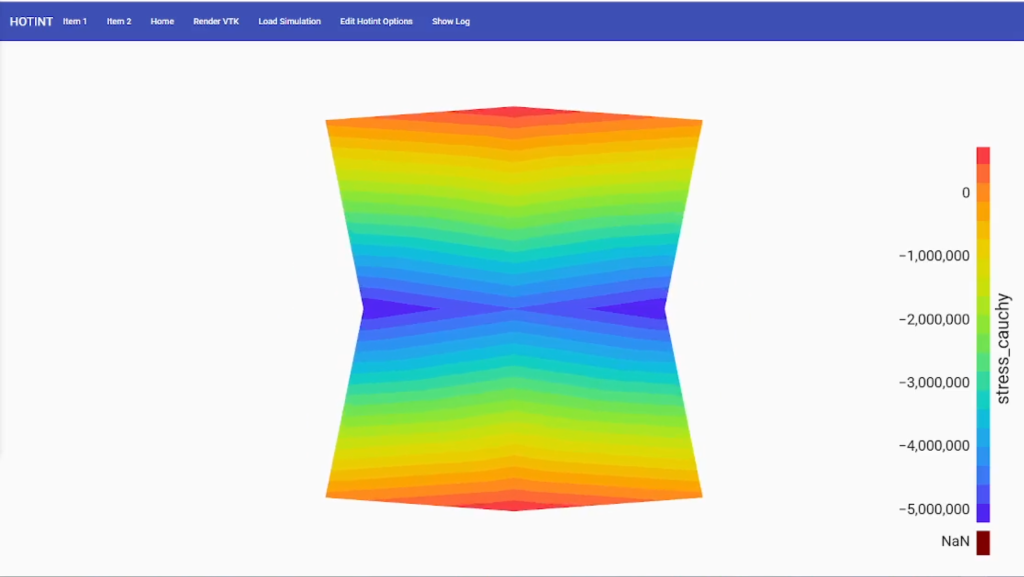

Because a purely image-based transfer of 3D views was not practical for all use cases given the available communication bandwidth, the transfer of complete models was provided for certain use cases. FEM (Finite Element Method) models, including their calculation results, are transmitted from the server to the client only once, in the form of a VTK scene description. This allows the 3D view to be changed interactively in the client and different result variables to be selected for visualisation, without transferring data from the server again.
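
Why a one-time transfer suffices can be shown with a small client-side model: once all result variables are on the client, switching between them is a local operation. This is a minimal sketch under assumptions; `FemScene`, `FemView` and `loadSceneFromServer` are hypothetical and do not reflect the real VTK scene format, and the server request is simulated by a counter.

```typescript
// Hypothetical client-side model of an FEM scene received once from the server.
interface FemScene {
  points: Float32Array;               // x, y, z per node
  results: Map<string, Float32Array>; // one scalar per node for each result variable
}

class FemView {
  private active: string;

  constructor(private readonly scene: FemScene) {
    const names = Array.from(scene.results.keys());
    if (names.length === 0) throw new Error("scene contains no result variables");
    this.active = names[0];
  }

  // Switching the displayed result variable is purely local: no server round trip.
  select(variable: string): void {
    if (!this.scene.results.has(variable)) {
      throw new Error(`unknown result variable: ${variable}`);
    }
    this.active = variable;
  }

  // Value range of the active variable, e.g. for scaling a colour legend.
  range(): [number, number] {
    const values = this.scene.results.get(this.active)!;
    let min = Infinity;
    let max = -Infinity;
    for (let i = 0; i < values.length; i++) {
      min = Math.min(min, values[i]);
      max = Math.max(max, values[i]);
    }
    return [min, max];
  }
}

// Hypothetical stand-in for the one-time transfer of the VTK scene description.
let serverRequests = 0;
function loadSceneFromServer(): FemScene {
  serverRequests++;
  return {
    points: new Float32Array([0, 0, 0, 1, 0, 0, 1, 1, 0]),
    results: new Map([
      ["displacement", new Float32Array([0, 0.5, 1])],
      ["stress", new Float32Array([-2, 4, 1])],
    ]),
  };
}
```

After `new FemView(loadSceneFromServer())`, any number of `select` calls leave `serverRequests` at 1. The trade-off is a larger initial transfer in exchange for interaction without further server traffic.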

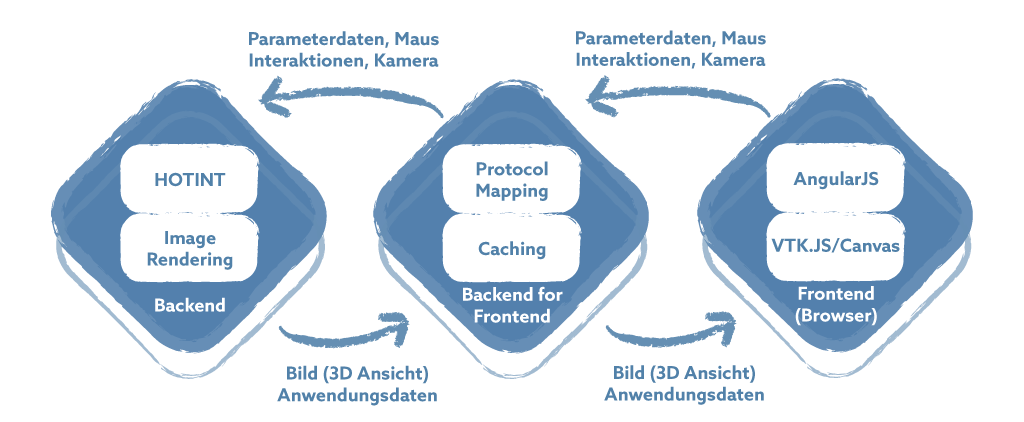

A Backend-For-Frontend (BFF) based on Node.js was implemented to connect the web clients. This approach was chosen because integrating a complete web server would have contradicted the goal of a minimally invasive adaptation of the existing application; moreover, Node.js offers good support for gRPC and GraphQL. The backend (HOTINT) provides the data to the BFF in generic form via gRPC, and the BFF in turn delivers it to the frontend via GraphQL. The BFF thus maps between the two protocols and can also implement intelligent caching mechanisms. Using a BFF keeps the extensions in the backend (HOTINT) small. The following figure shows the implementation concept.
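
The article does not describe which caching mechanisms the BFF uses. As one plausible example, the sketch below assumes a least-recently-used (LRU) cache for rendered frames, so that repeated requests for the same time step do not reach HOTINT again. `LruCache`, `FrameFetcher` and `makeFrameResolver` are hypothetical names, and the gRPC call is replaced by a plain function. Keying by time step alone is a simplification: since the image also depends on the camera, a real cache key would need to include the view parameters.

```typescript
// Minimal LRU cache: a Map iterates in insertion order, so re-inserting an
// entry on access marks it as most recently used.
class LruCache<K, V> {
  private readonly entries = new Map<K, V>();

  constructor(private readonly capacity: number) {}

  get(key: K): V | undefined {
    const value = this.entries.get(key);
    if (value === undefined) return undefined;
    this.entries.delete(key);
    this.entries.set(key, value);
    return value;
  }

  set(key: K, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, value);
    if (this.entries.size > this.capacity) {
      // Evict the least recently used entry (the first in iteration order).
      const oldest = this.entries.keys().next().value as K;
      this.entries.delete(oldest);
    }
  }
}

// Hypothetical stand-in for a gRPC request that fetches one rendered frame from HOTINT.
type FrameFetcher = (timeStep: number) => Uint8Array;

// Resolver-like function in the BFF: answer from the cache, call the backend only on a miss.
function makeFrameResolver(fetchFromBackend: FrameFetcher, capacity: number): (timeStep: number) => Uint8Array {
  const cache = new LruCache<number, Uint8Array>(capacity);
  return (timeStep) => {
    const cached = cache.get(timeStep);
    if (cached !== undefined) return cached;
    const frame = fetchFromBackend(timeStep);
    cache.set(timeStep, frame);
    return frame;
  };
}
```

Placing the cache in the BFF rather than in HOTINT fits the minimally invasive goal: the simulation code stays unaware of how often clients revisit the same data.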

Figure 1: Implementation concept

Technical realisation and implementation

Findings made during implementation could have required changes to the planned implementation concept. Surprisingly, this was not the case: the concept was implemented as planned, without any major deviations. In the end, only the following changes to HOTINT were necessary:

- OpenGL Off-Screen Rendering

- Export / import of the parameter settings as JSON

- VTK scene generation for visualising FEM calculation results
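
The JSON export and import of parameter settings is only named, not specified, in the article. The sketch below therefore assumes a hypothetical nested key/value format and shows how a TypeScript client might validate imported settings and read a value by a dotted path. The key names `SolverOptions`, `end_time` and `verbose` are made up for illustration.

```typescript
// Hypothetical shape of exported parameter settings (not HOTINT's real format).
type ParamValue = number | string | boolean;
interface ParameterSettings {
  [name: string]: ParamValue | ParameterSettings;
}

// Runtime check that parsed JSON has the assumed nested key/value shape.
function isSettings(x: unknown): x is ParameterSettings {
  if (typeof x !== "object" || x === null || Array.isArray(x)) return false;
  return Object.values(x).every(
    (v) => typeof v === "number" || typeof v === "string" || typeof v === "boolean" || isSettings(v),
  );
}

function exportSettings(s: ParameterSettings): string {
  return JSON.stringify(s, null, 2);
}

function importSettings(json: string): ParameterSettings {
  const parsed: unknown = JSON.parse(json);
  if (!isSettings(parsed)) throw new Error("invalid parameter settings");
  return parsed;
}

// Look up a leaf value by dotted path; returns undefined for missing keys or sections.
function getParam(s: ParameterSettings, path: string): ParamValue | undefined {
  let node: ParamValue | ParameterSettings | undefined = s;
  for (const key of path.split(".")) {
    if (typeof node !== "object") return undefined;
    node = node[key];
  }
  return typeof node === "object" ? undefined : node;
}
```

For example, `getParam(settings, "SolverOptions.end_time")` reads a nested number, while requesting a whole section or a missing key yields `undefined`. Validating on import keeps malformed files from reaching the simulation.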

The existing OpenGL rendering code did not need to be adapted. HOTINT's code base comprises about 200,000 lines of code (LOC) in roughly 300 classes. The HOTINTWeb extension comprises about 2,000 LOC, while the adaptations to HOTINT itself were very small, at about 500 LOC (0.25 % of the code base).

The backend was implemented with gRPC as planned. The individual images for the image-based transmission of the 3D representation are created with off-screen rendering, using the libraries GLFW, glbinding and glm. To reduce the volume of data transmitted, the images are compressed to JPEG with TurboJPEG.
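
Two details of this pipeline can be illustrated in a few lines: how large an uncompressed frame is, and the row order of pixels read back from OpenGL. `glReadPixels` returns rows starting at the lower-left corner, whereas JPEG encoders normally expect the top row first, so the rows must be reversed (libjpeg-turbo's TurboJPEG API also offers a bottom-up option that avoids the explicit copy). Whether HOTINTWeb flips rows itself or leaves this to the encoder is not stated in the article; the functions below are a hypothetical sketch of the logic, written in TypeScript rather than the backend's C++.

```typescript
// Size in bytes of an uncompressed frame read back from the framebuffer.
function rawFrameBytes(width: number, height: number, bytesPerPixel: number): number {
  return width * height * bytesPerPixel;
}

// glReadPixels returns rows starting at the lower-left corner (bottom row first),
// while JPEG encoders expect the top row first, so the row order is reversed.
function flipRows(pixels: Uint8Array, width: number, height: number, bytesPerPixel: number): Uint8Array {
  const rowBytes = width * bytesPerPixel;
  if (pixels.length !== rowBytes * height) {
    throw new Error("buffer size does not match width * height * bytesPerPixel");
  }
  const flipped = new Uint8Array(pixels.length);
  for (let y = 0; y < height; y++) {
    const sourceStart = (height - 1 - y) * rowBytes;
    flipped.set(pixels.subarray(sourceStart, sourceStart + rowBytes), y * rowBytes);
  }
  return flipped;
}
```

As an illustration with an assumed resolution and frame rate: a 1280 × 720 RGB frame occupies 1280 × 720 × 3 = 2,764,800 bytes, and at 25 frames per second that amounts to 69,120,000 bytes per second uncompressed, which is why compressing each image before transmission matters.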

The Backend-For-Frontend (BFF) was developed from scratch with Node.js and communicates with the backend (HOTINT) using gRPC-JS. Apollo Server is used as the GraphQL framework: GraphQL mutations control the simulation, and GraphQL subscriptions transfer the images and messages.
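
The division of labour between mutations and subscriptions can be sketched as follows. The schema is a hypothetical example, not the project's actual schema, and the small hand-written publish/subscribe bus only stands in for the subscription mechanism; it is not Apollo Server's API. `SimulationBackend` is likewise a hypothetical stand-in for the gRPC connection to HOTINT.

```typescript
// Hypothetical GraphQL schema for the BFF (not the project's actual schema).
const typeDefs = `
  type Frame { timeStep: Int!, jpegBase64: String! }
  type Query { status: String! }
  type Mutation {
    startSimulation(projectFile: String!): Boolean!
    stopSimulation: Boolean!
  }
  type Subscription {
    frames: Frame!
    messages: String!
  }
`;

type Topic = "frames" | "messages";
type Handler = (payload: unknown) => void;

// Minimal synchronous publish/subscribe bus standing in for GraphQL subscriptions.
class PubSub {
  private readonly handlers = new Map<Topic, Handler[]>();

  subscribe(topic: Topic, handler: Handler): () => void {
    let list = this.handlers.get(topic);
    if (list === undefined) {
      list = [];
      this.handlers.set(topic, list);
    }
    list.push(handler);
    const registered = list;
    return () => {
      const i = registered.indexOf(handler);
      if (i >= 0) registered.splice(i, 1);
    };
  }

  publish(topic: Topic, payload: unknown): void {
    for (const handler of this.handlers.get(topic) ?? []) handler(payload);
  }
}

// Hypothetical stand-in for the gRPC connection to HOTINT.
interface SimulationBackend {
  run(projectFile: string, onStep: (timeStep: number, jpeg: Uint8Array) => void, onMessage: (text: string) => void): void;
  stop(): void;
}

// Mutation resolvers: they only trigger actions; results flow back via subscriptions.
function makeMutations(backend: SimulationBackend, bus: PubSub) {
  return {
    startSimulation(projectFile: string): boolean {
      backend.run(
        projectFile,
        // Encoding the bytes for the String field (e.g. as base64) is omitted here.
        (timeStep, jpeg) => bus.publish("frames", { timeStep, jpeg }),
        (text) => bus.publish("messages", text),
      );
      return true;
    },
    stopSimulation(): boolean {
      backend.stop();
      return true;
    },
  };
}
```

The design choice shows in the return types: a mutation answers immediately with a simple acknowledgement, while the potentially long stream of time steps and messages reaches the client asynchronously through the subscription topics.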

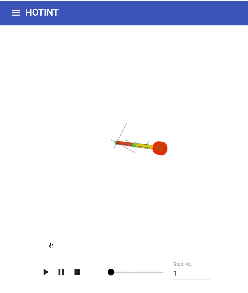

The frontend was built with Angular in combination with Material Design. vtk.js is used for interaction with the 3D view, even for the image-based transfer, which has the advantage that camera interactions did not have to be re-implemented by hand.
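
With image-based transfer, each camera change in the client means a new image has to be rendered on the server. A common way to keep this responsive during mouse drags is latest-wins coalescing: while one image request is in flight, intermediate camera states are dropped and only the most recent one is sent next. The article does not say whether HOTINTWeb does this; the sketch is an assumption. The `CameraState` fields follow the usual VTK camera parameters (position, focal point, view-up vector, view angle), but no vtk.js API is called, and `FrameRequester` is a hypothetical name.

```typescript
// Camera state as a plain object; fields follow the usual VTK camera parameters.
interface CameraState {
  position: [number, number, number];
  focalPoint: [number, number, number];
  viewUp: [number, number, number];
  viewAngle: number; // degrees
}

// Latest-wins coalescing: while a frame request is in flight, newer camera
// states overwrite each other; only the most recent one is sent afterwards.
class FrameRequester {
  private inFlight = false;
  private pending: CameraState | null = null;

  constructor(private readonly send: (camera: CameraState) => void) {}

  onCameraChanged(camera: CameraState): void {
    if (this.inFlight) {
      this.pending = camera; // drop any older pending state
      return;
    }
    this.inFlight = true;
    this.send(camera);
  }

  // Called when the server's image for the last request has arrived.
  onFrameReceived(): void {
    this.inFlight = false;
    if (this.pending !== null) {
      const next = this.pending;
      this.pending = null;
      this.onCameraChanged(next);
    }
  }
}
```

This bounds the load on the server to one render per received image, regardless of how many mouse events the browser fires in between.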

Interaction with the simulation roughly follows this procedure:

- Prepare the simulation environment (select the project file).

- Start the simulation run.

- During the run, HOTINT continuously generates new time steps and messages, which are passed to the frontend via the backend and the BFF.

- The frontend decides when new images are needed or when picking (selecting elements) should start. Previous time steps can also be accessed at any time.

- Complete or stop the simulation.
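
The procedure listed above can be modelled as a small state machine on the client side. The sketch below is a hypothetical model, not the project's code: the state and event names are invented, and time steps are reduced to their simulation times. It shows which actions are allowed in which phase and how previous time steps stay accessible after a run has ended.

```typescript
// Hypothetical model of the interaction procedure as a small state machine.
type SimState = "idle" | "prepared" | "running" | "completed" | "stopped";
type SimEvent = "selectProject" | "start" | "complete" | "stop";

// Allowed transitions; any event missing for a state is rejected.
const transitions: Record<SimState, Partial<Record<SimEvent, SimState>>> = {
  idle: { selectProject: "prepared" },
  prepared: { selectProject: "prepared", start: "running" },
  running: { complete: "completed", stop: "stopped" },
  completed: { selectProject: "prepared", start: "running" },
  stopped: { selectProject: "prepared", start: "running" },
};

class SimulationSession {
  private current: SimState = "idle";
  private readonly stepTimes: number[] = []; // simulation time of each received time step

  get state(): SimState {
    return this.current;
  }

  get stepCount(): number {
    return this.stepTimes.length;
  }

  dispatch(event: SimEvent): void {
    const next = transitions[this.current][event];
    if (next === undefined) {
      throw new Error(`event '${event}' not allowed in state '${this.current}'`);
    }
    if (event === "start") this.stepTimes.length = 0; // a new run starts a new history
    this.current = next;
  }

  // Called for each time step that arrives from HOTINT via the backend and the BFF.
  onTimeStep(time: number): void {
    if (this.current !== "running") throw new Error("no simulation running");
    this.stepTimes.push(time);
  }

  // Previous time steps remain accessible, also after the run has ended.
  timeOfStep(index: number): number | undefined {
    return index >= 0 && index < this.stepTimes.length ? this.stepTimes[index] : undefined;
  }
}
```

Making the transitions an explicit table keeps invalid sequences, such as completing a run that was never started, from reaching the backend at all.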

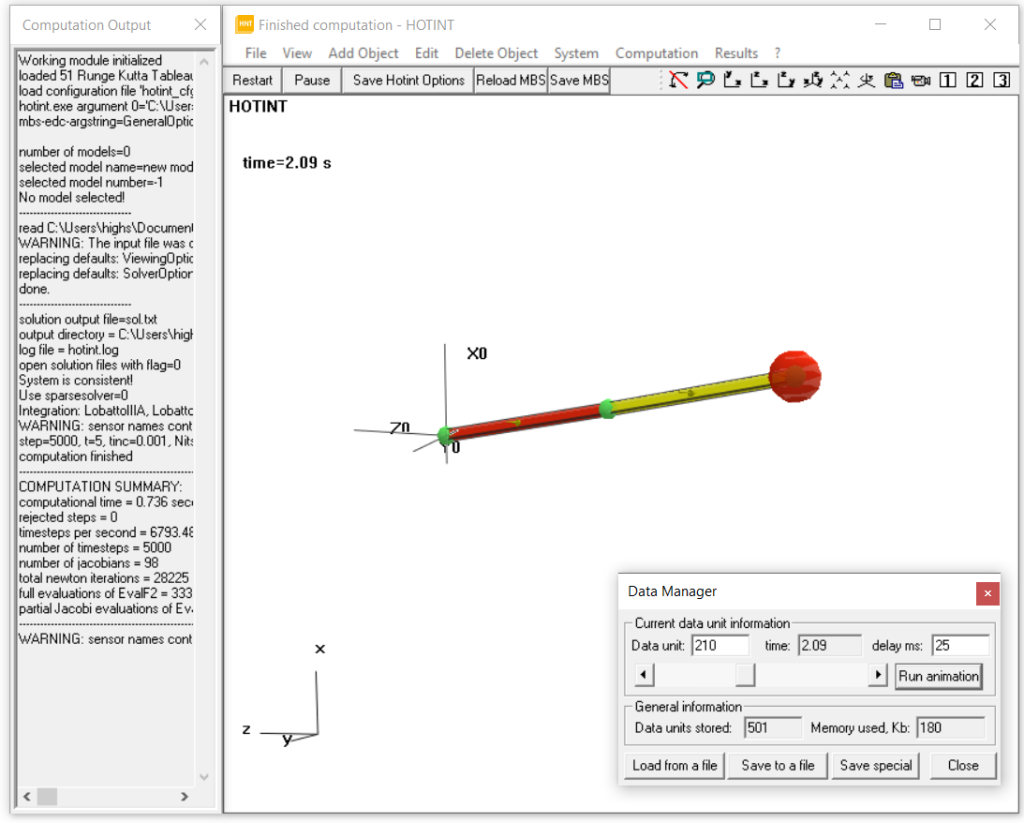

The following illustration compares the previous desktop application and the new web application:

Screenshot 1: old desktop application

Screenshot 2: new web version


Summary / Outlook

As mentioned at the beginning, user interfaces are increasingly being realised with web technologies, because these give a wide range of users low-threshold access. Consequently, classic desktop applications now face this expectation as well. This raises the question of the appropriate approach: complete redevelopment or step-by-step modernisation (reengineering). The decision must, of course, be made individually for each project and depends heavily on the scope and quality of the existing code base. However, a new development is by no means always the better solution. In the project presented here, the minimally invasive reengineering approach combined with an agile process proved its worth. Expectations regarding both results and effort were even exceeded: the project was completed within a lead time of about three months and with an effort of about 500 person-hours.

In summary, the following factors were decisive for the project's success:

- The agile approach, with regular joint coordination of interim results and the further course of action.

- The chosen minimally invasive approach.

- Future-proofing: the existing code and the extensions can be maintained and developed independently of each other.

Authors

Martin Hochstrasser, MSc

Software Developer

DI (FH) Josef Jank, MSc

Senior Software Architect & Project Manager

DI (FH) Alexander Leutgeb

Head of Unit Industrial Software Applications