Project SIGNDETECT: Automated Traffic Sign Detection in Upper Austria

Innovative AI project optimizes the detection of traffic signs for road administration

RISC Software GmbH has successfully completed a project on the automated detection and precise localization of traffic signs. In cooperation with the Directorate for Road Construction and Traffic of the Province of Upper Austria, the project tested whether existing RGB video data from the RoadSTAR recording vehicle can be used for AI-supported detection, classification, and localization of traffic signs. Using machine learning and stereo camera analysis, a system was developed that detects traffic signs with high accuracy and determines their position in real-world coordinates. This enables more efficient and precise management of road databases and lays the groundwork for future innovations in traffic monitoring.

Precise and automated localization of traffic signs in road traffic

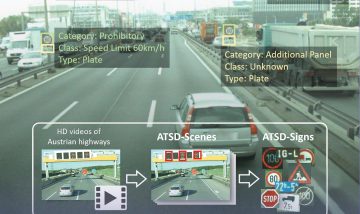

The Province of Upper Austria regularly records the condition of its roads using the RoadSTAR recording vehicle from the Austrian Institute of Technology. Among other equipment, the vehicle uses a calibrated stereo camera system that records each drive as RGB video. On behalf of the Directorate for Road Construction and Traffic of the Province, RISC Software GmbH tested how well these videos can be used to automatically detect traffic signs (where in the image they are), classify them (which traffic signs they are), and accurately localize them (where in the world they are). This task involves several steps, shown in Figure 1.

Figure 1: Steps of traffic sign detection and localization

Creation of a traffic sign dataset

In the first step, all traffic signs in the videos must be detected reliably. For this purpose, a representative dataset for Austrian roads was compiled from several thousand images, some selected from public datasets and others newly annotated. A notable source is the public Austrian Highway Traffic Sign Data Set (ATSD), which contains a large number of traffic signs on Austrian highways and was created as part of the earlier research project SafeSign.
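Merging public datasets with newly annotated images usually means bringing all labels into one annotation format and one class list. The article does not say which format was used; the sketch below assumes the widely used YOLO text format (one line per box: class id, then box center, width, and height, normalized to the image size). The class list and the per-source aliases are hypothetical.

```python
# Hypothetical unified class list and per-source label aliases.
CLASS_IDS = {"yield": 0, "stop": 1, "kilometer_marker": 2}
ALIASES = {"give_way": "yield", "km_post": "kilometer_marker"}


def class_id(source_label):
    """Map a label from any source dataset onto the unified class id."""
    name = ALIASES.get(source_label, source_label)
    if name not in CLASS_IDS:
        raise KeyError(f"unmapped label: {source_label!r}")
    return CLASS_IDS[name]


def to_yolo_line(box, cls, img_w, img_h):
    """Convert a pixel box (x1, y1, x2, y2) into one YOLO-format label line:
    'cls x_center y_center width height', all normalized to [0, 1]."""
    x1, y1, x2, y2 = box
    if not (0 <= x1 < x2 <= img_w and 0 <= y1 < y2 <= img_h):
        raise ValueError(f"box {box} lies outside a {img_w}x{img_h} image")
    xc = (x1 + x2) / 2 / img_w
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{cls} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```

For example, `to_yolo_line((100, 200, 300, 400), class_id("give_way"), 1000, 1000)` yields `"0 0.200000 0.300000 0.200000 0.200000"`. Raising on unmapped labels makes gaps in the class mapping visible instead of silently dropping annotations.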

Model training

This dataset was used to train a model that meets the requirements for both speed and accuracy. For each frame of the left and right videos, the trained detection model returns the positions of the traffic signs as bounding boxes, together with their class (e.g., yield; see Figure 2). This is the prerequisite for precise spatial localization.

Figure 2: Example image with detected traffic signs. Each sign is surrounded by a bounding box, and the model’s confidence (here 100%) and the class are also displayed.
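The article does not name the detector architecture. Object detectors commonly post-process their raw predictions by discarding low-confidence boxes and applying non-maximum suppression (NMS), so that each sign is reported once per frame. The following framework-independent sketch shows that step; the `Detection` record and both thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detected sign: pixel box (x1, y1, x2, y2), class label, confidence."""
    x1: float
    y1: float
    x2: float
    y2: float
    label: str
    confidence: float


def iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a.x1, b.x1), max(a.y1, b.y1)
    ix2, iy2 = min(a.x2, b.x2), min(a.y2, b.y2)
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, min_confidence=0.5, iou_threshold=0.5):
    """Drop low-confidence boxes, then apply greedy per-class NMS: keep the
    most confident box and discard same-class boxes overlapping it too much."""
    candidates = sorted(
        (d for d in dets if d.confidence >= min_confidence),
        key=lambda d: d.confidence,
        reverse=True,
    )
    kept = []
    for det in candidates:
        # Keep det unless a kept box of the same class overlaps it strongly.
        if all(k.label != det.label or iou(k, det) <= iou_threshold for k in kept):
            kept.append(det)
    return kept
```

Suppressing only within a class is a design choice: two overlapping boxes with different labels, such as a main sign and a supplementary plate, both survive.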

Point matching & 3D position estimation

A stereo camera system allows the depth of a point in space to be estimated, provided that the point's position is known in both images. Image areas are therefore matched to find pixel-accurate correspondences (see Figure 3). From these, the disparity can be calculated: the offset, in pixels, between the positions of the same point in the left and right images. A familiar example is human depth perception. Our eyes are a fixed distance apart and thus capture slightly different images. If we look at a finger and alternately close the left and right eye, the finger appears to shift relative to the background; this shift depends on the finger's distance and is called disparity.

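Our brain uses this to determine distance. In a calibrated stereo system, the distance between the two cameras (the baseline) and their optical properties, such as focal length, are known, so disparity can be converted into distance: the larger the disparity, the closer the point. Once the position relative to the camera is known, real-world coordinates can also be calculated, provided the GPS position of the camera is known, as it is for the RoadSTAR truck. The camera's orientation is also needed to rotate the relative position into world axes.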

Figure 3: Matching identifies pixel-accurate positions in the left and right image.
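The article does not say which matcher or camera model was used. The sketch below covers this step under simplifying assumptions. It assumes rectified images, so matches lie on the same row. It finds the disparity by minimizing the sum of absolute differences (SAD) over a small window, then applies pinhole triangulation, depth Z = f · B / d (focal length f in pixels, baseline B in meters, disparity d in pixels). Finally, it places the point in a metric map frame using the camera position and compass heading only, ignoring pitch, roll, and mounting offsets. All function and parameter names are hypothetical.

```python
import math


def match_disparity(left_row, right_row, x, half_window=2, max_disparity=64):
    """Disparity of pixel x in a rectified left scanline: the shift d that
    minimizes the SAD between a window around x in the left row and a window
    around x - d in the right row (same row, shifted left)."""
    if x - half_window < 0 or x + half_window >= len(left_row):
        raise ValueError("matching window does not fit into the row")
    best_d, best_cost = 0, math.inf
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # the candidate window would leave the right image
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def camera_point(u, v, disparity, focal_px, baseline_m, cx, cy):
    """Pinhole stereo triangulation: Z = f * B / d, then back-project pixel
    (u, v). Camera frame: X right, Y down, Z forward, all in meters."""
    if disparity <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    z = focal_px * baseline_m / disparity
    return (u - cx) * z / focal_px, (v - cy) * z / focal_px, z


def to_map(east0, north0, heading_deg, x_right, z_forward):
    """Place a camera-frame point in a metric map frame (e.g. UTM east/north),
    given the camera position and compass heading (0 = north, clockwise).
    Simplification: the camera looks along the heading; pitch, roll, and
    mounting offsets are ignored."""
    h = math.radians(heading_deg)
    east = east0 + z_forward * math.sin(h) + x_right * math.cos(h)
    north = north0 + z_forward * math.cos(h) - x_right * math.sin(h)
    return east, north
```

With f = 1000 px and B = 0.5 m, a disparity of 10 px corresponds to a depth of 50 m. Because depth is inversely proportional to disparity, a one-pixel matching error costs far more accuracy for distant signs than for near ones, which is one reason pixel-accurate correspondences matter.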

Spatial clustering

In the next step, all detections of the same traffic sign are grouped. This is necessary because each sign is recorded in many frames as the vehicle passes it, and every sign must be reliably re-identified across these frames, which is especially difficult when several identical signs appear in the same image. The estimated real-world coordinates are used for this grouping. They also help identify outliers that were located incorrectly, for example due to wrong point matches on partially occluded signs.
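The article does not name the clustering method. One common choice for grouping repeated position estimates is density-based clustering such as DBSCAN, which also labels isolated points as noise and therefore flags mislocated observations as a by-product. The sketch below swaps in a minimal DBSCAN, clusters each sign class separately so that different sign types on the same pole stay apart, and reports the per-axis median position of each cluster. The `eps` (meters) and `min_samples` values are hypothetical.

```python
import math
from statistics import median


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN on 2-D points in meters. Returns one label per point:
    a cluster index >= 0, or -1 for noise. min_samples counts the point itself."""
    n = len(points)
    labels = [None] * n

    def neighbours(i):
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins, but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            more = neighbours(j)
            if len(more) >= min_samples:
                queue.extend(more)  # j is a core point: keep growing
    return labels


def group_observations(observations, eps=1.5, min_samples=3):
    """observations: (label, x, y) per detection in map coordinates.
    Clusters each class separately and returns (signs, outliers), where each
    sign is (label, median_x, median_y, n_observations)."""
    by_class = {}
    for label, x, y in observations:
        by_class.setdefault(label, []).append((x, y))
    signs, outliers = [], []
    for label, pts in sorted(by_class.items()):
        ids = dbscan(pts, eps, min_samples)
        for c in sorted(set(ids) - {-1}):
            members = [p for p, k in zip(pts, ids) if k == c]
            signs.append((label,
                          median(p[0] for p in members),
                          median(p[1] for p in members),
                          len(members)))
        outliers.extend((label, *p) for p, k in zip(pts, ids) if k == -1)
    return signs, outliers
```

The median rather than the mean keeps a single bad stereo match inside a cluster from pulling the final position away from the true sign location.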

Text recognition

In a final step, traffic signs that contain text are processed further. The text is extracted with OCR (optical character recognition) models. Many pre-trained options are available, from classic OCR engines such as Tesseract to vision-language models such as Florence-2, and with proper preprocessing they deliver excellent results. Figure 4 shows examples of text extraction on kilometer markers, which are particularly challenging because of their small size.

Figure 4: OCR examples. The recognized text is displayed above each image section.
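The article does not detail its preprocessing. One typical step for small signs such as kilometer markers is to crop the detected box with some margin, so that characters at the edge are not cut off, and to enlarge small crops before OCR. The sketch below only computes the crop rectangle and the upscale factor; the padding fraction and minimum height are hypothetical values.

```python
import math


def ocr_crop(box, img_w, img_h, pad_frac=0.15, min_height=64):
    """Compute a padded crop around a detected sign and an upscale factor.

    box: pixel box (x1, y1, x2, y2) from the detector.
    Returns ((cx1, cy1, cx2, cy2), scale): the crop in integer pixels, clamped
    to the image, and the factor needed to enlarge it to at least min_height.
    """
    x1, y1, x2, y2 = box
    pad_x = (x2 - x1) * pad_frac
    pad_y = (y2 - y1) * pad_frac
    cx1 = max(0, math.floor(x1 - pad_x))
    cy1 = max(0, math.floor(y1 - pad_y))
    cx2 = min(img_w, math.ceil(x2 + pad_x))
    cy2 = min(img_h, math.ceil(y2 + pad_y))
    scale = max(1.0, min_height / (cy2 - cy1))  # never shrink large crops
    return (cx1, cy1, cx2, cy2), scale
```

The resized crop would then be handed to the OCR model, for example to Tesseract through the `pytesseract` wrapper's `image_to_string`. Clamping to the image bounds matters because signs at the image border are common when the vehicle passes close by.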

Output of detection data & visualization

Together, these steps form a pipeline that can automatically detect and localize existing traffic signs across any number of videos. The generated data can be used for visualizations, for identifying problematic signs, and, most importantly, for improving the position data of traffic signs in the Province’s road databases.
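How the detections are compared with the road database is not described in the article. As a hypothetical illustration of the last use case, the sketch below matches each database entry to the nearest detected sign of the same class in a shared metric map frame. It then reports the offset and flags entries that are far off or have no detection at all. The record layout, status names, and the 5 m threshold are assumptions.

```python
import math


def compare_with_database(detected, database, max_offset_m=5.0):
    """detected, database: lists of (sign_id, label, x, y) in the same metric
    map coordinates. For each database entry, find the nearest detected sign
    of the same class.

    Returns (db_id, detection_id or None, offset_m or None, status) per entry,
    with status 'ok', 'review' (offset above max_offset_m) or 'not_found'."""
    report = []
    for db_id, label, x, y in database:
        candidates = [(math.dist((x, y), (dx, dy)), det_id)
                      for det_id, dlabel, dx, dy in detected if dlabel == label]
        if not candidates:
            report.append((db_id, None, None, "not_found"))
            continue
        offset, det_id = min(candidates)  # nearest same-class detection
        status = "ok" if offset <= max_offset_m else "review"
        report.append((db_id, det_id, offset, status))
    return report
```

This greedy nearest-neighbor lookup can assign one detection to several database entries; a production comparison would likely need a one-to-one assignment and should also report detections that match no database entry.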


Project Details

- Project Short Title: SIGNDETECT
- Project Full Title: Automated traffic sign detection for road administration
- Project Partner:
  - Province of Upper Austria, Directorate for Road Construction and Traffic
- Total Budget: 25,000 EUR
- Project Duration: 09/2024–12/2024 (4 months)

Contact Person

Project Lead

Dr. Felix Oberhauser

Data Scientist & Researcher