MCP server: The connecting fabric between AI, data and tools

by Patrick Kraus-Füreder

How the Model Context Protocol securely connects AI systems with files, APIs and technical systems

AI systems are increasingly becoming active players: they not only read data, but also trigger workflows, process tickets, analyze measured values and interact directly with technical systems. For such applications to function securely, traceably and across systems, a standard is needed that reliably connects models with files, APIs, repositories or edge devices. This is precisely the role of the Model Context Protocol (MCP) – an open protocol that cleanly decouples AI clients and enterprise systems and connects them via clearly defined tools, resources and policies. The following article shows why MCP is becoming relevant for modern industrial, engineering and IT environments, how MCP servers work and which architecture and security principles are crucial in practice.

Contents

- Why MCP is relevant now

- What MCP is – and what an MCP server does

- Architecture – from the client host to the server topology

- Security, governance & quality – more than “just a tool call”

- Industrial Grade: Edge, latency & data sovereignty

- Interoperability & ecosystem

- Typical fields of application (industry)

- Performance and operational aspects

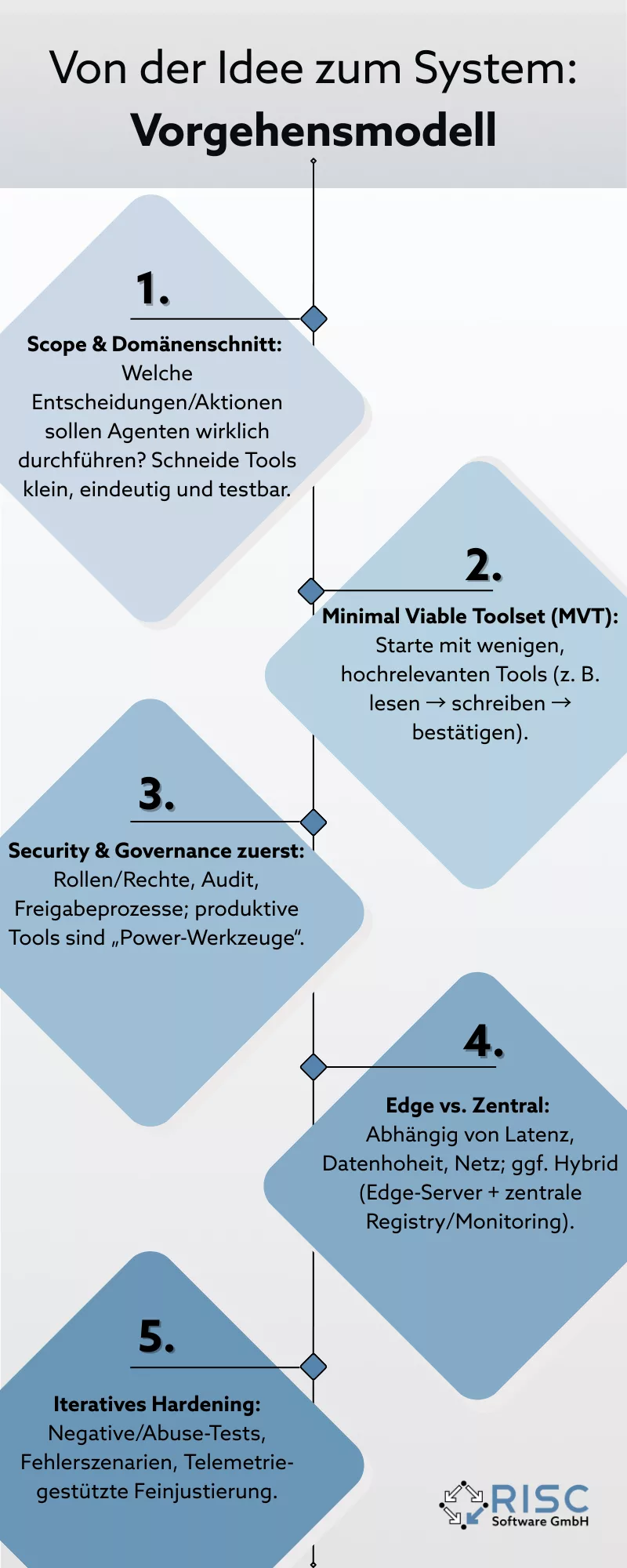

- From the idea to the system: process model

- Does MCP make sense for us?

- Sources (selection)

- Contact us

- Author

- Read more

In short, modern AI applications need more than just a strong model. They need reliable, secure and standardized access to data sources, tools and workflows – on-prem, in the cloud and increasingly at the edge. This is precisely where the

Why MCP is relevant now

Three developments have overlapped in the last 18-24 months that now make MCP strategically relevant. Firstly, the

What MCP is – and what an MCP server does

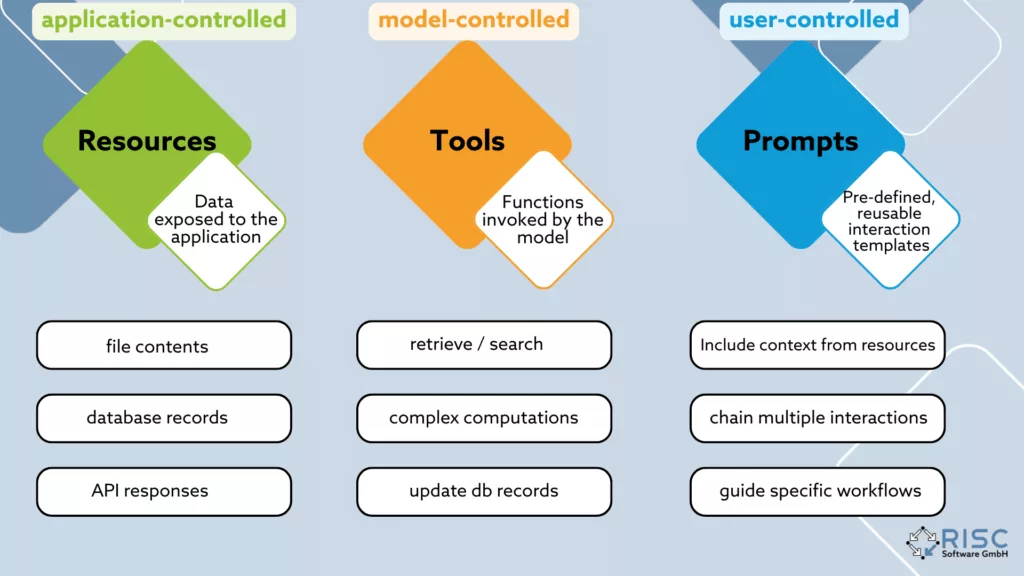

MCP standardizes the connection between a client – i.e. an AI application or an agent – and a server that acts as an adapter into the existing system. An MCP server bundles

Architecture – from the client host to the server topology

A typical MCP setup consists of an AI-enabled application – such as an IDE plugin, a chat frontend or a desktop app – that acts as an MCP client and triggers all tool calls. Examples of this are

| Pattern | Description | Equivalent in software architecture | Source |
| --- | --- | --- | --- |
| Adapter server | Connects an existing system or API to the MCP protocol. Reduces integration effort and separates logic from interface. | Adapter pattern / ports-and-adapters architecture | Medium, Wikipedia |
| Proxy / aggregator | Combines several backends or servers under a common interface; enables request routing or data aggregation. | API gateway and aggregator pattern | microservices.io, Medium |
| Domain server | Organizes tools according to business domains instead of source systems; promotes semantic coherence and reuse. | Domain service and bounded context principle (DDD) | Wikipedia |
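The adapter and proxy/aggregator patterns from the table can be illustrated with a small in-process sketch. It deliberately does not use an MCP SDK or the MCP wire protocol; `LegacyTicketApi`, `ToolServer`, `build_ticket_adapter` and `Aggregator` are hypothetical names chosen only for this illustration, and the `server.tool` naming scheme is an assumption.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

ToolFn = Callable[..., Any]


class LegacyTicketApi:
    """Hypothetical stand-in for an existing backend system."""

    def __init__(self) -> None:
        self._tickets = {"T-1": {"title": "Spindle vibration", "state": "open"}}

    def fetch(self, ticket_id: str) -> Dict[str, str]:
        return self._tickets[ticket_id]


@dataclass
class ToolServer:
    """Simplified stand-in for an MCP server: a named collection of tools."""

    name: str
    tools: Dict[str, ToolFn] = field(default_factory=dict)

    def tool(self, fn: ToolFn) -> ToolFn:
        # Register the function under its own name and return it unchanged.
        self.tools[fn.__name__] = fn
        return fn

    def call(self, tool_name: str, **arguments: Any) -> Any:
        return self.tools[tool_name](**arguments)


def build_ticket_adapter(api: LegacyTicketApi) -> ToolServer:
    """Adapter server: wraps one backend behind a tool interface."""
    server = ToolServer("tickets")

    @server.tool
    def get_ticket(ticket_id: str) -> Dict[str, str]:
        return api.fetch(ticket_id)

    return server


class Aggregator:
    """Proxy / aggregator: routes 'server.tool' names to the owning server."""

    def __init__(self, *servers: ToolServer) -> None:
        self._servers = {server.name: server for server in servers}

    def list_tools(self) -> List[str]:
        return sorted(
            f"{server.name}.{tool_name}"
            for server in self._servers.values()
            for tool_name in server.tools
        )

    def call(self, qualified_name: str, **arguments: Any) -> Any:
        server_name, tool_name = qualified_name.split(".", 1)
        return self._servers[server_name].call(tool_name, **arguments)


if __name__ == "__main__":
    gateway = Aggregator(build_ticket_adapter(LegacyTicketApi()))
    print(gateway.list_tools())
    print(gateway.call("tickets.get_ticket", ticket_id="T-1"))
```

A domain server would follow the same shape, but its tools would be grouped by business domain rather than by the source system they wrap.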

Security, governance & quality – more than “just a tool call”

With MCP, the operational reach of an agent grows, which significantly increases the requirements for security, governance and quality. Precise control of authentication and authorization is key, so that only those functions are activated that are really necessary in the respective environment and cover exactly the permitted actions.
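One way to picture "only those functions that are really necessary" is a per-role allowlist that is checked before every tool call and recorded for audit. The following is a minimal sketch under stated assumptions: `Policy`, `GuardedToolRegistry` and the role and tool names are hypothetical, and authentication (establishing who the caller is) is assumed to have happened elsewhere.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, FrozenSet, List


class ToolNotPermitted(PermissionError):
    """Raised when a caller requests a tool outside its allowlist."""


@dataclass(frozen=True)
class Policy:
    # Assumed policy shape: role name -> set of tool names it may call.
    allowed_tools: Dict[str, FrozenSet[str]]

    def permits(self, role: str, tool_name: str) -> bool:
        # Unknown roles get an empty allowlist (deny by default).
        return tool_name in self.allowed_tools.get(role, frozenset())


class GuardedToolRegistry:
    """Exposes and executes only the tools the policy allows for a role."""

    def __init__(self, policy: Policy) -> None:
        self._policy = policy
        self._tools: Dict[str, Callable[..., Any]] = {}
        self.audit_log: List[Dict[str, Any]] = []

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self._tools[name] = fn

    def visible_tools(self, role: str) -> List[str]:
        # A role never even sees tools it is not allowed to call.
        return sorted(n for n in self._tools if self._policy.permits(role, n))

    def call(self, role: str, name: str, **arguments: Any) -> Any:
        allowed = name in self._tools and self._policy.permits(role, name)
        # Every attempt is recorded, including denied ones.
        self.audit_log.append({"role": role, "tool": name, "allowed": allowed})
        if not allowed:
            raise ToolNotPermitted(f"{role!r} may not call {name!r}")
        return self._tools[name](**arguments)


if __name__ == "__main__":
    policy = Policy({"operator": frozenset({"read_measurement"})})
    registry = GuardedToolRegistry(policy)
    registry.register("read_measurement", lambda sensor_id: 21.5)
    registry.register("restart_line", lambda line_id: "restarted")
    print(registry.visible_tools("operator"))
```

Denying by default and logging refused calls keeps the permitted surface small and makes later audits straightforward.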

Industrial Grade: Edge, latency & data sovereignty

Many industrial workflows require low latency and high availability – ideal for edge MCP servers close to the machine (e.g. on the line), combined with centralized services for registry, observability and policy. The advantage: data does not leave the zone, decisions remain fast and integration into OT/IT networks remains controllable. The latest integration paths in common AI clients also improve the remote server model for cases where an edge deployment is not required.

Interoperability & ecosystem

The official MCP portal and the GitHub organization modelcontextprotocol bundle specification, SDKs and documentation of the Model Context Protocol. Growing lists of example and product servers facilitate selection and integration. A prominent reference example is the

Typical fields of application (industry)

In the industrial sector, MCP is particularly strong where structured, auditable and repeatable interactions between AI agents and existing systems are required. A large block concerns knowledge access: technical manuals, test plans or change logs can be connected via standardized resource and search tools, and as soon as special requirements arise, a classic RAG system is usually no longer sufficient – a dedicated MCP tool then becomes the logical next step. In quality assurance and QA pipelines, agents can retrieve measurement data, start image or signal analyses, store versioned results and escalate automatically in the event of deviations. In

Performance and operational aspects

The performance of an MCP system is essentially determined by three factors: pre- and post-processing at CPU level, the latency of the connected backends and networks and – if models are executed within the server or complex transformations are carried out – the local GPU or NPU load. In production environments, a consistently asynchronous architecture with clearly defined timeouts and circuit breakers per tool has proven crucial. Equally important is an observability strategy that records latencies, error rates and tool usage patterns as standard and correlates them with user or session data using structured logs. Stability over longer operating phases is achieved through clean
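The combination of per-tool timeouts, circuit breakers and structured logs mentioned in this section could be sketched roughly as follows. This is an illustration built only on Python's standard library, not an MCP SDK feature; `GuardedTool`, its parameters, the default thresholds and the log fields are assumptions made for the example.

```python
import asyncio
import json
import time
from typing import Any, Awaitable, Callable, List, Optional


class CircuitOpenError(RuntimeError):
    """Raised when a tool is short-circuited after repeated failures."""


class GuardedTool:
    """Wraps one async tool with a timeout and a simple circuit breaker."""

    def __init__(
        self,
        name: str,
        fn: Callable[..., Awaitable[Any]],
        timeout_s: float = 1.0,
        failure_threshold: int = 3,
        reset_after_s: float = 30.0,
    ) -> None:
        self.name = name
        self._fn = fn
        self.timeout_s = timeout_s
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self._failures = 0
        self._opened_at: Optional[float] = None
        self.log_lines: List[str] = []  # stand-in for a real log sink

    def _log(self, session_id: str, status: str, started: float) -> None:
        # One JSON object per call so latency and errors can be correlated
        # with the session that triggered them.
        self.log_lines.append(json.dumps({
            "tool": self.name,
            "session_id": session_id,
            "status": status,
            "latency_ms": round((time.monotonic() - started) * 1000, 1),
        }))

    async def call(self, session_id: str, **arguments: Any) -> Any:
        started = time.monotonic()
        if self._opened_at is not None:
            if started - self._opened_at < self.reset_after_s:
                self._log(session_id, "circuit_open", started)
                raise CircuitOpenError(self.name)
            # Cool-down elapsed: allow a trial call; one failure reopens.
            self._opened_at = None
            self._failures = self.failure_threshold - 1
        try:
            result = await asyncio.wait_for(
                self._fn(**arguments), timeout=self.timeout_s
            )
        except Exception:
            # Timeouts and backend errors both count as failures.
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = time.monotonic()
            self._log(session_id, "error", started)
            raise
        self._failures = 0
        self._log(session_id, "ok", started)
        return result
```

Keeping one breaker per tool means a slow or failing backend only disables its own tool, while the remaining tools on the same server stay available.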

Sources (selection)

- Official MCP documentation & website: definition, concepts (tools/resources/prompts), specification & protocol. Links: modelcontextprotocol.io; Tools – Model Context Protocol; MCP GitHub

- Anthropic – Introduction & Integrations: architecture, motivation; remote MCP server & client support. Links: Anthropic

- Ecosystem & Registry: GitHub organization, official GitHub MCP server, registry announcements/articles. Links: MCP GitHub; GitHub MCP Server; GitHub MCP Registry

- Security research: initial studies on risks/exploits and maintainability in the MCP context. Links: MCP Blog

- Community/Guides: example server overviews, learning resources. Links: MCP Example Servers; GitHub MCP Resources

- Awesome lists: community-curated lists of available MCP servers. Links: AppCypher GitHub; Wong2 GitHub; PunkPeye GitHub; MCP Examples

Author

DI Dr. Patrick Kraus-Füreder, BSc

AI Product Manager